2024 AI Inference Infrastructure Survey Highlights

As AI becomes the cornerstone of modern business, organizations face crucial decisions about their infrastructure strategies. These span from leveraging managed APIs to developing in-house solutions. As the creators of BentoML, a Unified Inference Platform for building and scaling AI applications, we aim to help organizations make informed infrastructure decisions. To provide data-driven insights, we conducted a comprehensive survey from mid-December 2024 to mid-January 2025. The survey gathered insights from over 250 participants across diverse industries, exploring topics like deployment patterns, model usage, and infrastructure challenges. Our findings are detailed in the 2024 State of AI Inference Infrastructure Survey Report.

This article presents the report's main findings and provides actionable recommendations to help organizations enhance their AI infrastructure strategies. For personalized guidance, consult our experts or participate in our Slack community, where AI practitioners exchange experiences and best practices.

Executive summary

Our analysis reveals four key findings about the current state of AI infrastructure adoption and implementation:

- Most organizations are in the early stages of their AI journey. Many companies are focused on building foundational AI capabilities. They are working with a limited number of models and dedicating significant resources to data preparation, training, and development. This foundational work is critical for creating scalable and sustainable AI solutions.

- Hybrid deployment and multi-model strategies are on the rise. While serverless API endpoints are widely used, organizations are increasingly combining them with custom solutions. This hybrid approach also extends to model selection, with companies leveraging a diverse range of models, such as LLMs and multimodal models.

- There’s a shift toward open-source and fine-tuned models. Open-source models are leading adoption as organizations fine-tune them for specific tasks, achieving better performance at lower costs. This shift indicates a growing desire for more control and customization in AI solutions.

- Key infrastructure challenges center around three main areas:

  - Deployment and maintenance complexity

  - GPU availability and pricing

  - Privacy and security concerns

These challenges highlight the need for tools that streamline AI infrastructure management while ensuring better privacy practices. Additionally, the growing demand for more advanced models, such as LLMs and multimodal models, is driving the need for mid- and high-end GPUs.

Now, let’s take a closer look at these key findings.

AI maturity: A focus on foundation building

Our survey reveals that most organizations are still establishing their AI foundations before moving to full-scale production.

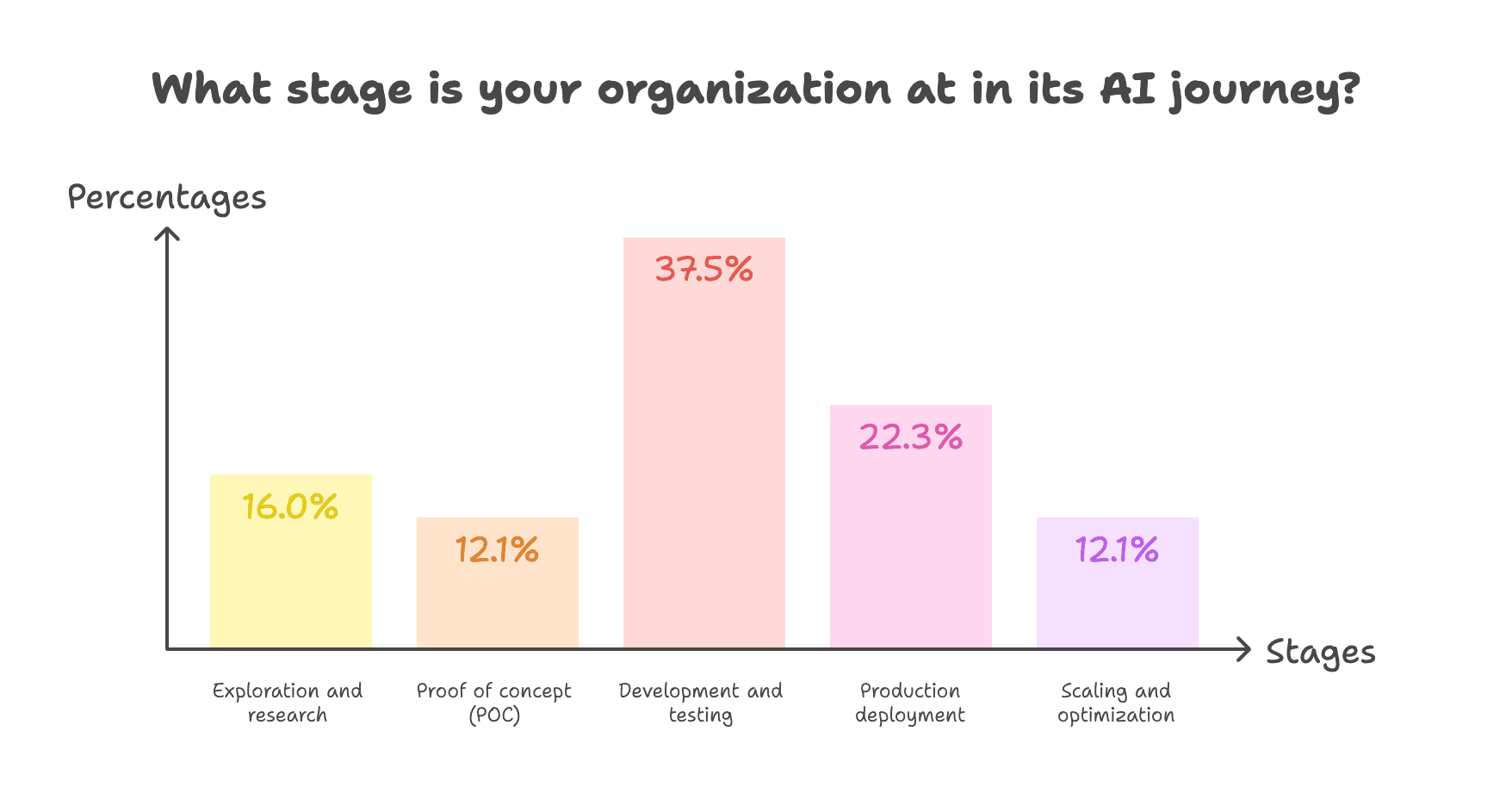

The maturity levels break down into three main categories:

- Early stages (28.1%): 16.0% of organizations are still in the exploration and research phase, while 12.1% are working on proof-of-concept projects.

- Development and testing (37.5%): This is the largest group, representing over a third of the organizations surveyed.

- Advanced stages (34.4%): Among those in more mature stages, 22.3% have moved to production deployment, and 12.1% are focused on scaling and optimization.

Although a significant portion of organizations have reached advanced stages, the majority (65.6%, combining the early-stage and development-and-testing groups) are still working to strengthen their foundations. This distribution reflects both the complexity of AI implementation and the importance of establishing solid groundwork before moving to production.

The dominance of LLMs and multimodal AI

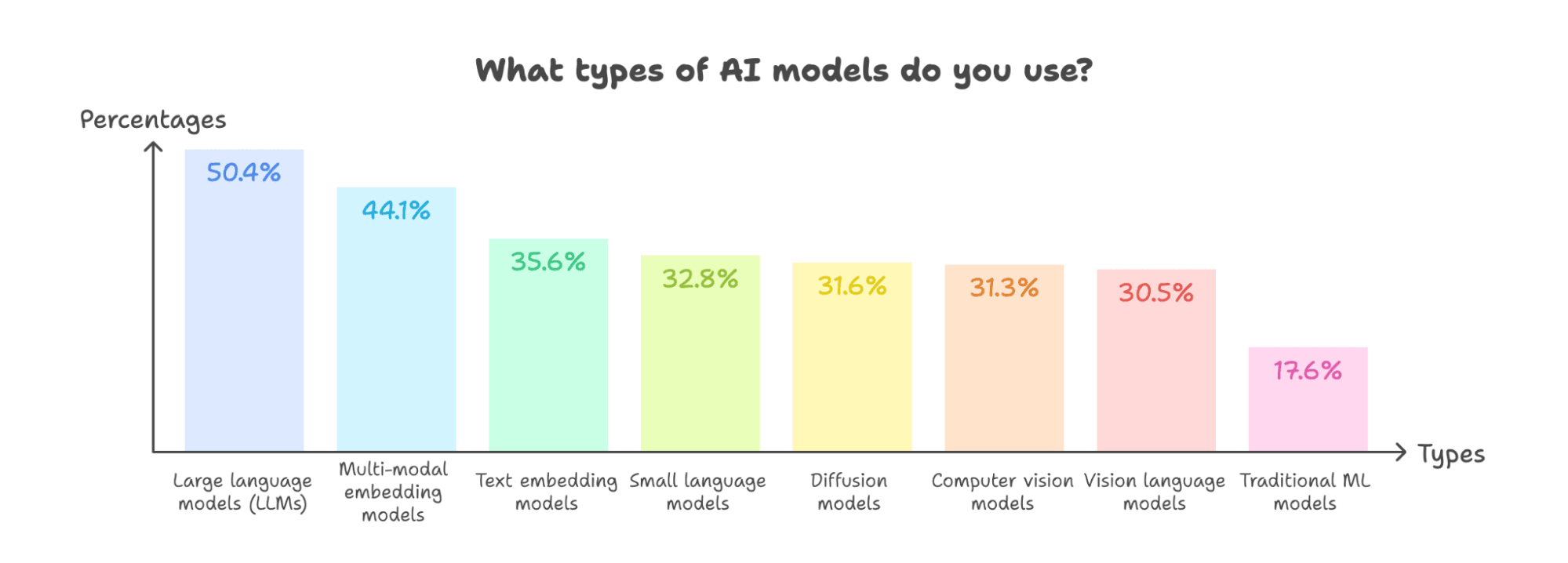

Our survey reveals a clear preference for generative AI, particularly models that can deliver richer and more dynamic user experiences. The emergence of hybrid model strategies reflects the growing need for flexible solutions to address complex, multi-faceted challenges.

- LLMs lead adoption at 50.4%, establishing themselves as the backbone of modern AI applications. This lead likely stems from their central role in powering advanced systems such as retrieval-augmented generation (RAG) and AI agents.

- Multimodal capabilities are also gaining significant traction:

  - Multimodal embedding models rank second at 44.1%.

  - Computer vision models and vision language models are each used by roughly one-third of respondents (31.3% and 30.5%, respectively).

These statistics show that organizations are expanding beyond text-based AI applications. This trend supports our predictions about multimodal AI, in which emerging interaction paradigms, such as video chat in ChatGPT, are transforming user experiences. These new modalities represent not just additional features, but entirely new ways of engaging with AI.

- Organizations are adopting hybrid model strategies. A significant majority (80.1%) use more than one model type, and over half (52.0%) are implementing three or more types. This means organizations are combining different AI capabilities to address varied use cases and requirements.

Deployment trends: From API-first to hybrid strategies

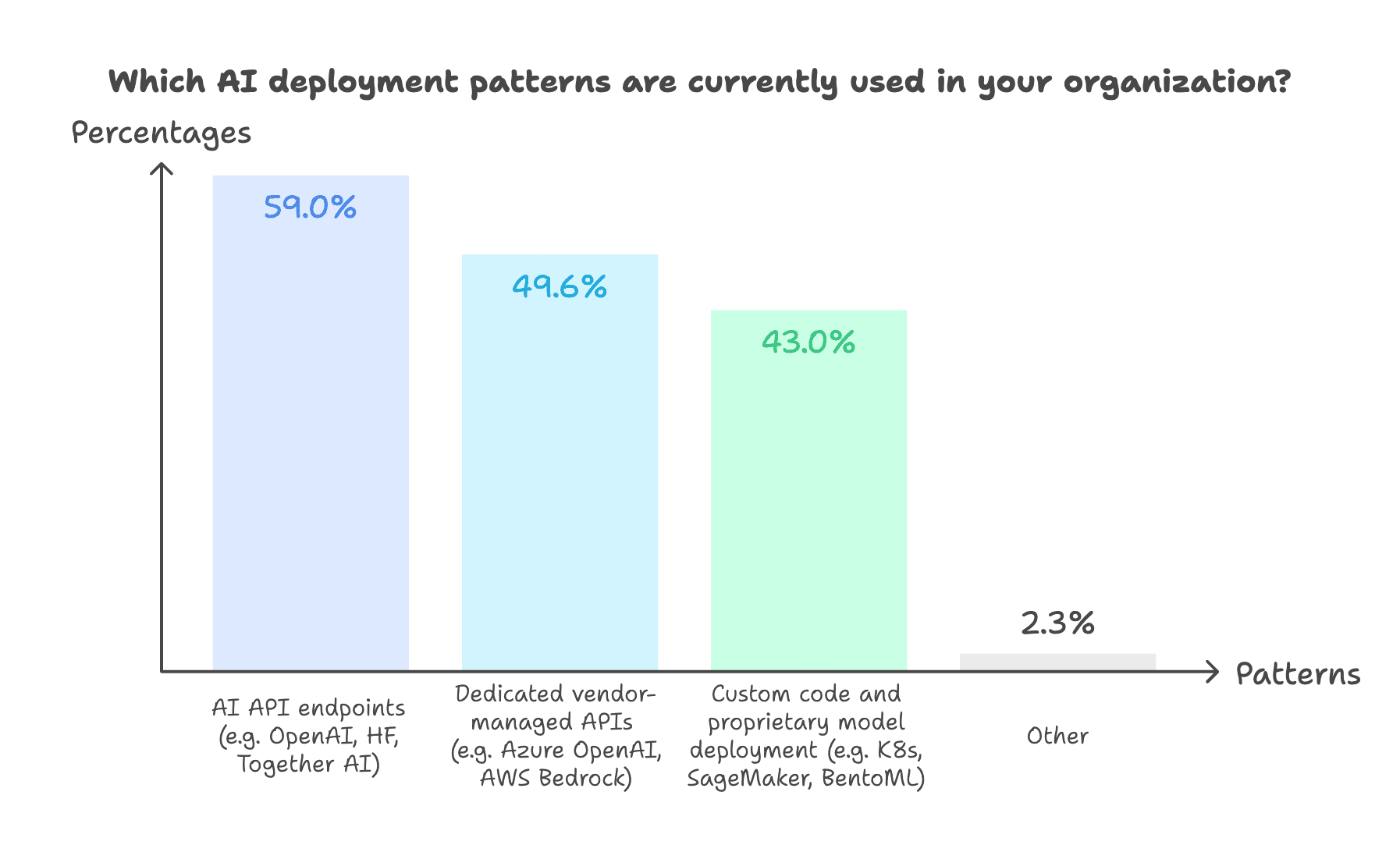

The survey reveals a clear preference for API-first deployment strategies. However, a hybrid approach that combines multiple deployment methods is also on the rise.

- API-first deployments dominate. 59.0% of respondents rely on AI API endpoints (e.g., OpenAI, Hugging Face, Together AI) for their AI deployments. Notably, 80.8% of them are small and medium-sized companies (fewer than 500 employees). This trend likely stems from the fact that AI APIs allow resource-constrained organizations to leverage advanced AI capabilities quickly. Freed from the complexities of infrastructure development and maintenance, organizations can expedite AI product deployment.

- Hybrid deployment strategies are emerging. Among the organizations using AI API endpoints, 40.4% also utilize dedicated vendor-managed APIs, and 33.8% choose custom code and proprietary model deployments. This suggests that some companies are taking a pragmatic, multi-layered approach to AI infrastructure. They aim to strike a balance between the ease of managed APIs and the adaptability of custom solutions.

-

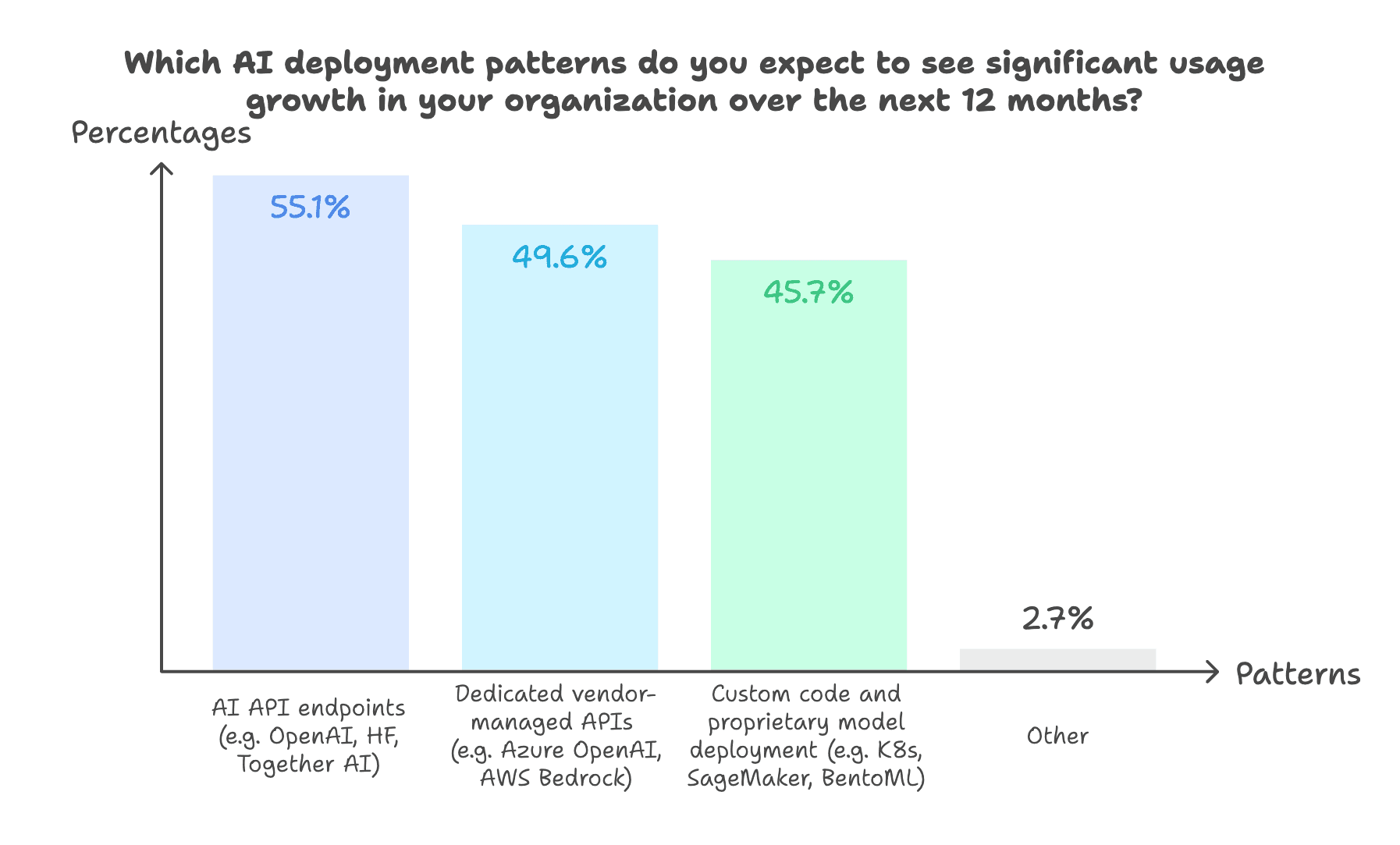

Strong growth is expected across all deployment patterns.

- 55.1% of respondents anticipate significant growth in AI API endpoint usage.

- 49.6% expect more adoption of dedicated vendor-managed APIs.

- 46.9% predict a rise in the adoption of multiple deployment options (i.e., selecting multiple deployment patterns) over the coming year.

While API-first approaches remain the priority for most organizations, some are also exploring a hybrid strategy. This suggests that one-size-fits-all solutions are rare in AI: organizations are likely building custom, long-term infrastructure capabilities while relying on simple API-based solutions to meet their current needs.

See our blog post to learn more about the choices between serverless and dedicated deployments.

Growing popularity of open-source and fine-tuned models

More organizations are embracing a hybrid model strategy, showing a clear shift toward open-source and custom AI solutions.

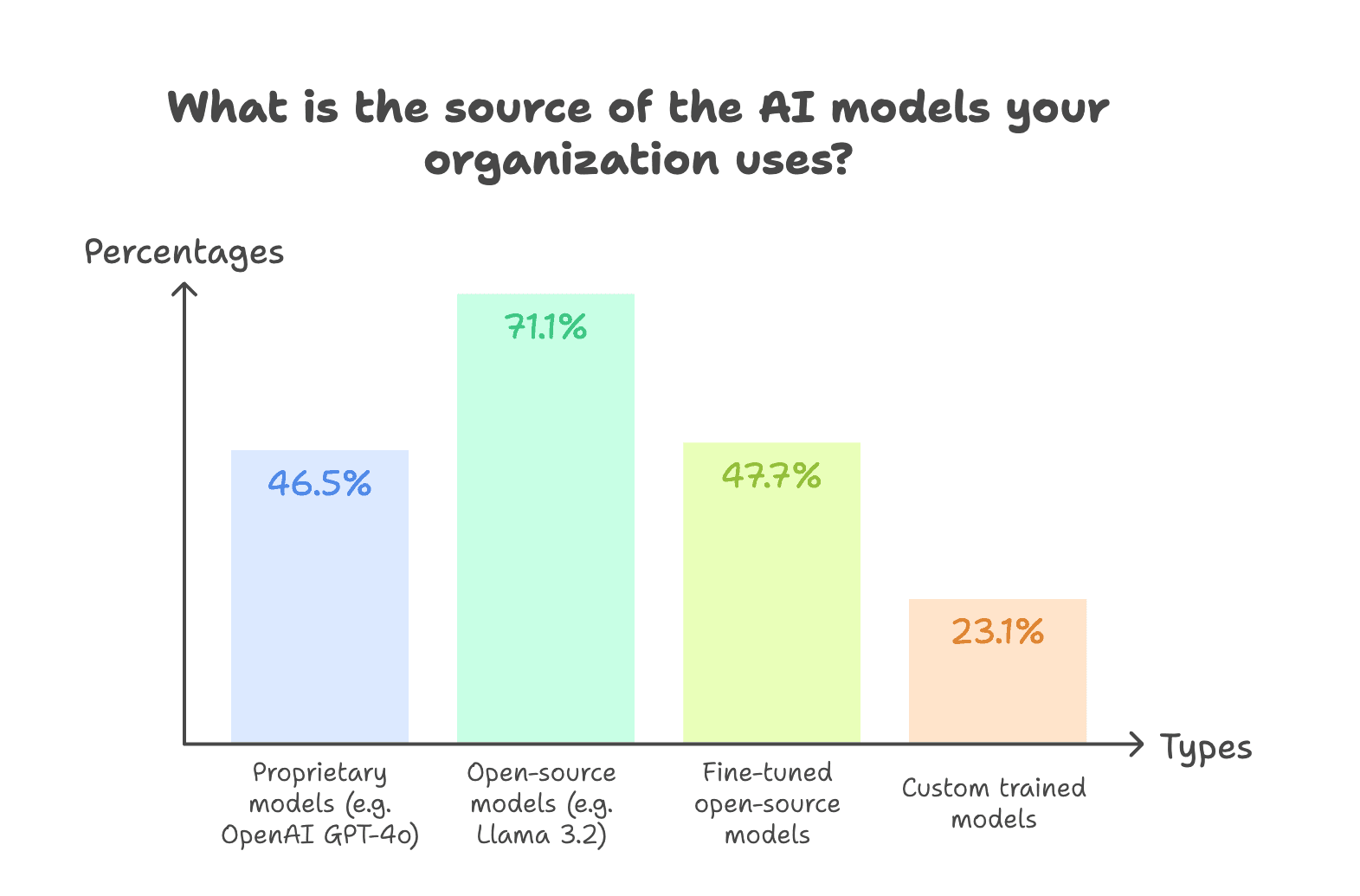

- Open-source models are at the forefront of adoption. Over 70% of respondents report using open-source models, indicating a strong preference for control and customization. This trend appears to be driven by the rapid advancement of open-source models like DeepSeek R1 and Llama 3.1 405B, which are steadily closing the performance gap with proprietary models on specific tasks.

- Mixed model adoption is common. 63.3% of respondents use a combination of proprietary, open-source, and custom models. This allows them to leverage the strengths of different model types based on their specific use cases and requirements.

- Fine-tuned models are gaining momentum. Nearly half of respondents (47.7%) fine-tune open-source models. This means organizations are working to customize AI models for specific tasks to optimize performance while maintaining control over their solutions.

-

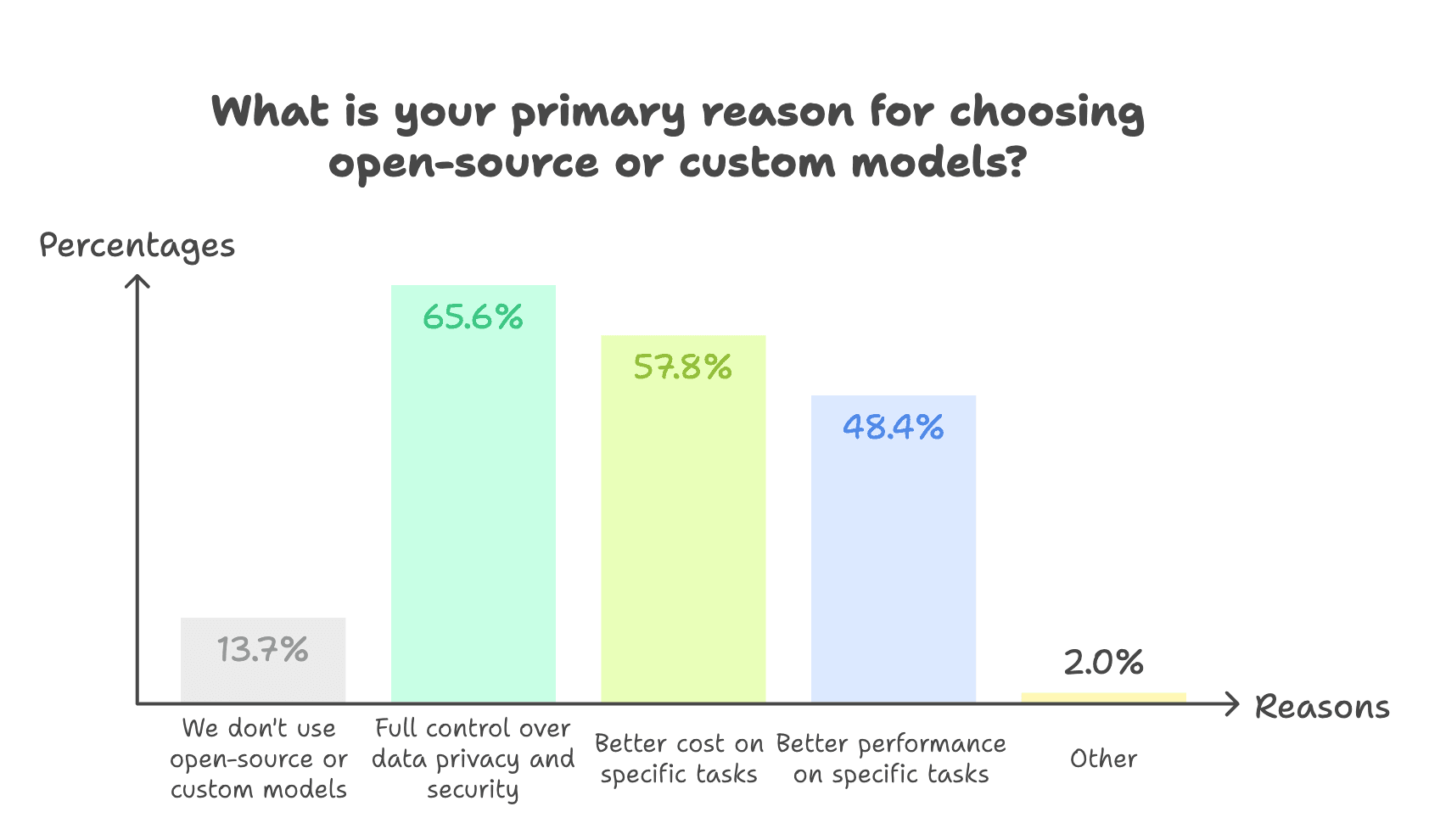

Data privacy and control are key drivers. 65.6% cite full control over data and privacy as the main reason for choosing open-source or custom models. These advantages allow organizations to:

- Maintain ownership of their data.

- Create tailored AI solutions that meet their unique requirements.

- Achieve better cost/performance ratios for specific tasks.

These results indicate that organizations are taking a strategic approach to model selection. They look to adopt the best tool for each task rather than relying on a one-size-fits-all solution. By balancing proprietary and open-weight models, they can:

- Maintain flexibility while avoiding vendor lock-in.

- Ensure continuous access to the latest AI advancements.

- Gain a first-mover advantage and maintain a competitive edge.

Key challenges in AI infrastructure

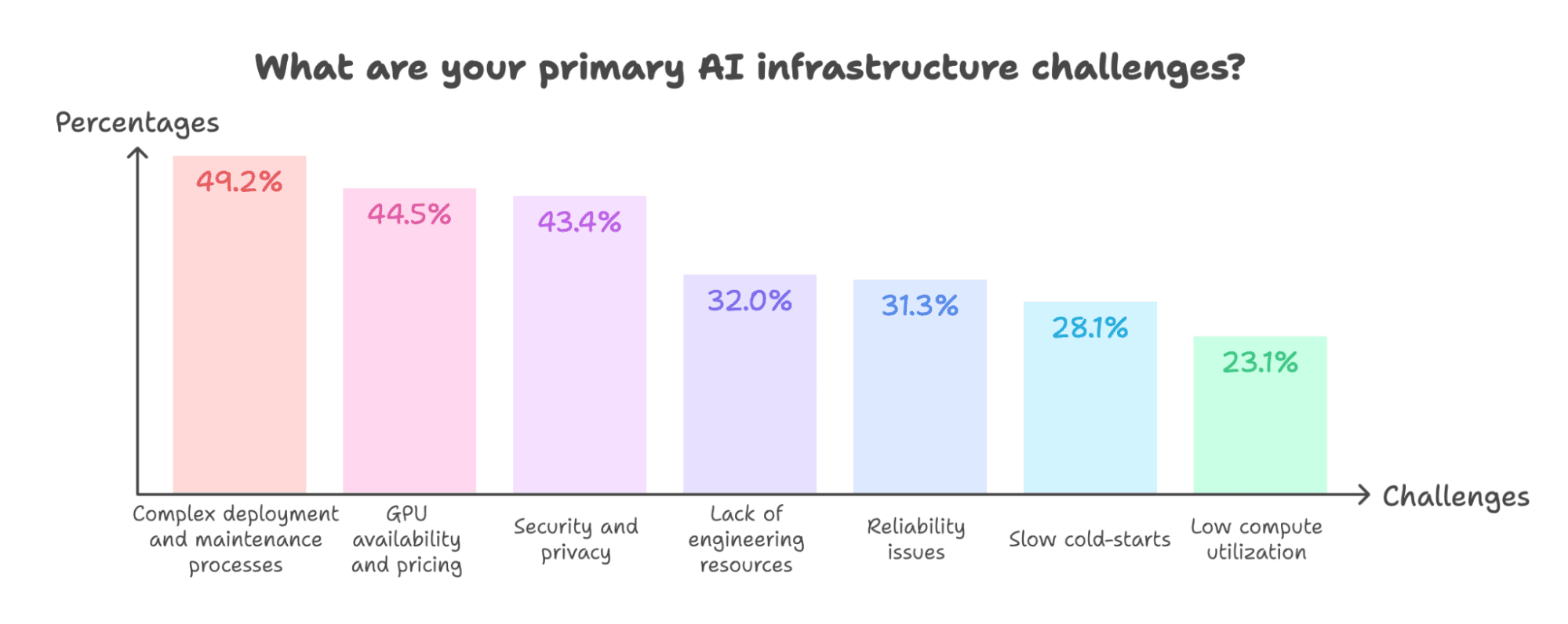

Organizations face several AI infrastructure challenges, with deployment complexity, GPU resources, and security being the top concerns.

- Deployment complexity is the top challenge, with 49.2% of respondents citing it as a major hurdle. This highlights the technical intricacies of AI systems, such as integrating with existing infrastructure and managing multiple model pipelines. It also means organizations need tools that simplify infrastructure management and reduce the complexity of AI adoption. This ties back to our earlier finding: the widespread use of AI API endpoints reflects a desire to avoid these complexities.

- GPU availability and pricing are another critical challenge, affecting 44.5% of respondents. This reflects the escalating computational demands as AI model adoption continues to grow. It also underscores the need for more flexible infrastructure solutions, such as platforms with multi-cloud support that let organizations choose the cloud provider with the best GPU availability and pricing for their specific use cases.

- Security and privacy are top concerns for 43.4% of organizations. This increasing focus on data protection may drive greater demand for secure infrastructure solutions like Bring Your Own Cloud (BYOC).

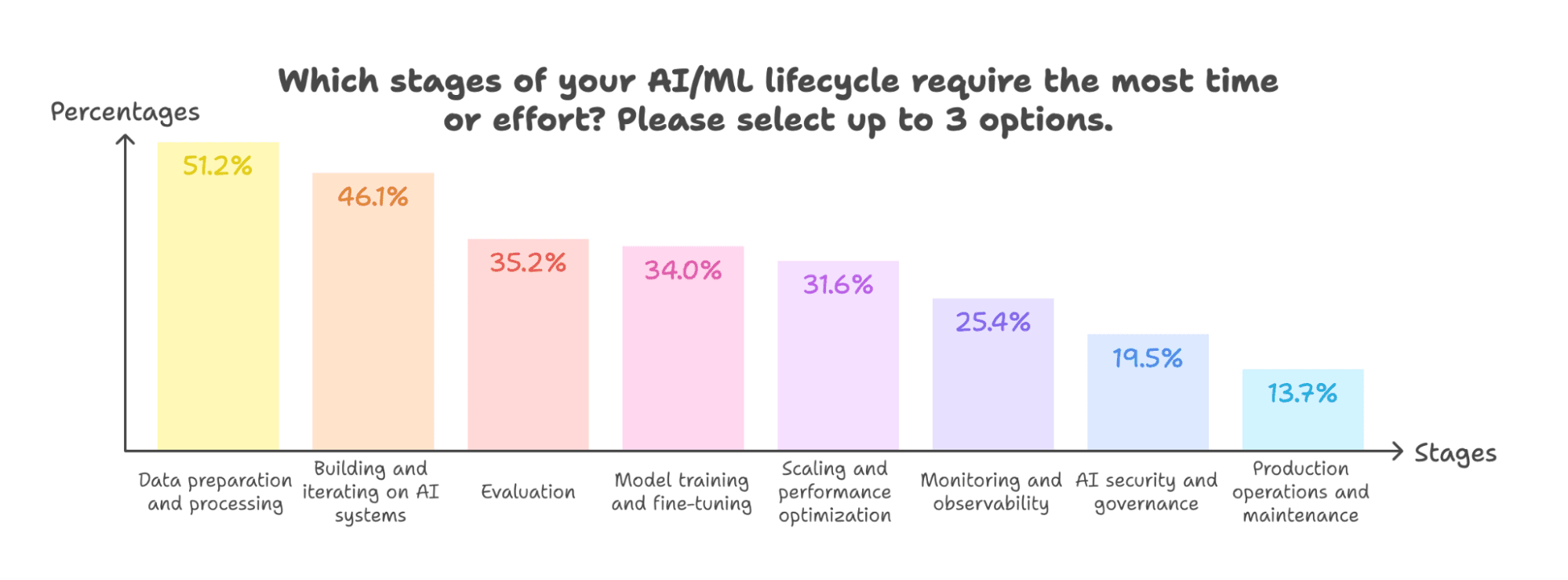

- Data preparation and processing remain the most time-consuming stage (51.2%). This underscores the difficulties in managing and cleaning data pipelines. Similar to our previous findings, it shows many organizations are still focused on establishing solid AI foundations.

- Building and iterating systems is the second most significant effort (46.1%). This points to the need for tools that accelerate model iteration and deployment, enabling faster experimentation and optimization.

It’s evident that many organizations are still working to lay the groundwork for their AI initiatives. As they move toward production, they require better tools to simplify deployment, iteration, and overall infrastructure management. In addition, some organizations need solutions that streamline model training, fine-tuning, observability, security, and operations.

Diverse infrastructure landscape: From cloud to on-premises

-

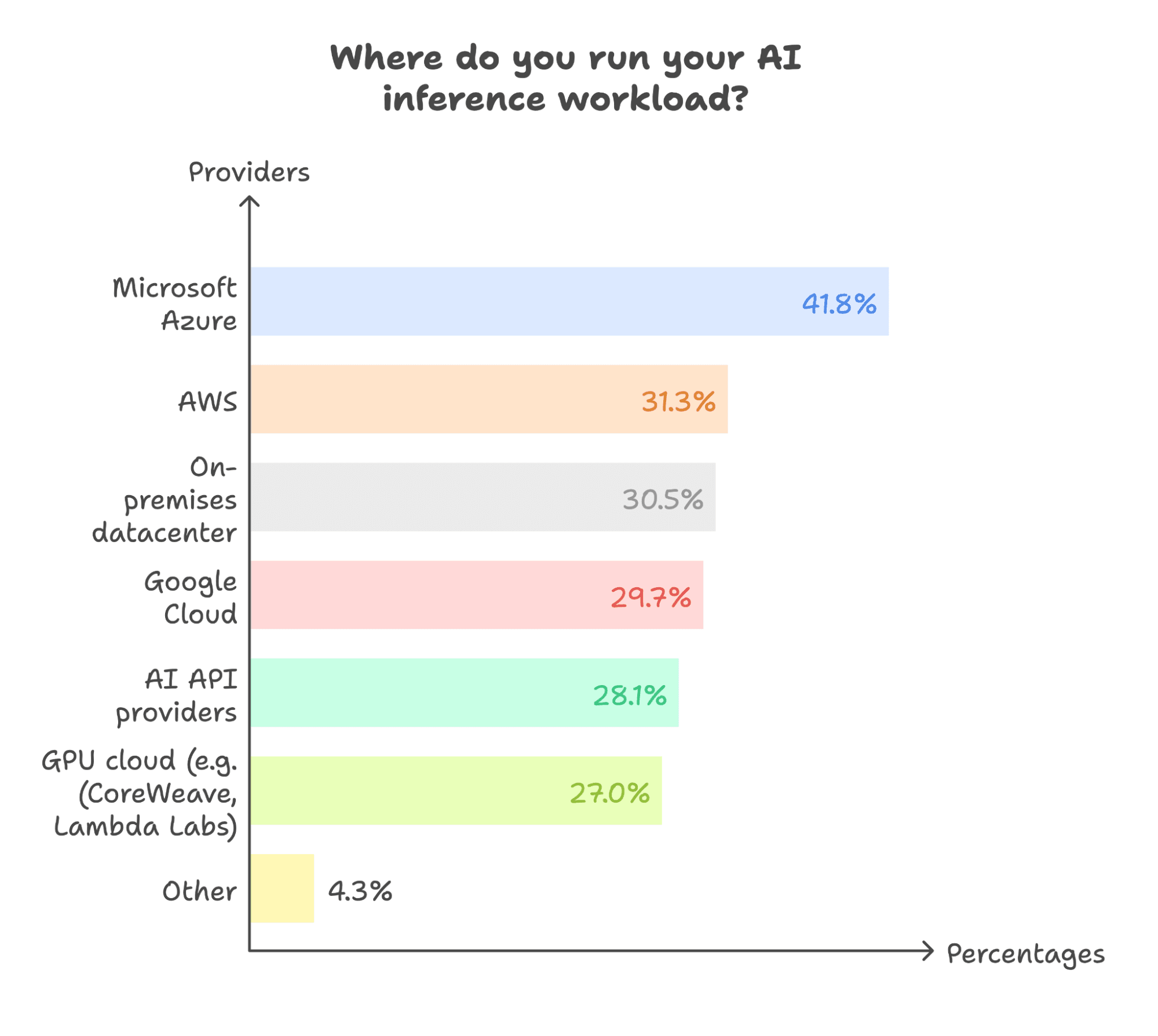

Public cloud adoption dominates:

- Microsoft Azure leads the usage (41.8%) but the competition remains strong. Others show comparable adoption rates with small gaps: AWS at 31.3%, on-premises at 30.5%, and Google Cloud at 29.7%.

- A notable 77.3% of respondents run their AI inference workloads on at least one public cloud (AWS, Google Cloud, or Microsoft Azure). Among these, 25.3% use at least two public clouds, and another 25.3% combine a public cloud with on-premises deployments. This suggests that some organizations are adopting a multi-cloud strategy or mixing cloud and on-premises solutions to optimize cost, performance, and control.

- Multi-provider strategies are prevalent: A significant 62.1% of respondents run inference across multiple environments, reflecting the growing trend toward hybrid infrastructures. This approach allows organizations to capitalize on the distinct advantages offered by each cloud provider, including pricing benefits, GPU availability, and specialized AI services.

Recommendations

Based on the survey findings, here are our key recommendations for organizations implementing AI solutions:

- Balance speed and control in your deployment strategy. Managed API endpoints are ideal for rapid prototyping and quick results. They enable fast deployment and experimentation without the overhead of managing infrastructure. However, to stay competitive, investing in custom code and model deployments is crucial. Combining these approaches helps reduce vendor dependency and provides greater control over your systems.

- Leverage open-source and custom models for greater control and customization. Open-source and custom models offer enhanced security, customization, and predictability. Fine-tuning these models with private datasets can deliver better performance on specific tasks at a lower cost. For mission-critical applications, they ensure more predictable and controllable behavior.

- Adopt tools that streamline infrastructure workflows while ensuring privacy. Platforms like BentoML accelerate time to market with simplified model deployment and iteration solutions. If security is a top priority for your organization, integrate privacy-first practices such as BYOC. This ensures your sensitive data never leaves your network boundary.

- Implement hybrid GPU strategies to balance performance and cost. As AI workloads, such as LLMs and multimodal models, become more demanding, optimizing GPU usage is essential. Organizations should consider hybrid GPU strategies that accommodate different model types and workloads. This could involve using multiple cloud providers to access the best pricing and GPU options for specific use cases.

More resources

Building and scaling AI infrastructure is a complex journey, but you don't have to navigate it alone. The BentoML team is here to help future-proof your AI infrastructure with actionable insights and expert guidance. Check out these resources to accelerate your success:

- Download the full report for exclusive access to in-depth insights and comprehensive data analysis.

- Connect with our team for customized guidance on implementing AI infrastructure solutions.

- Be part of our Slack community of AI practitioners and engineers. Share experiences, get real-time support, and stay updated on best practices in AI infrastructure.