The Best Open-Source Embedding Models in 2026

Authors

Last Updated

Share

If you are building an AI-powered system for semantic search, recommendation engines, or information retrieval, you’re likely familiar with embedding models. These models are useful for transforming text, images, and other data types into vectors that capture semantic meaning. Embedding models help systems understand and retrieve relevant content based on similarity in meaning.

In this blog post, we’ll explore some of the top open-source embedding models and answer common questions about them.

Qwen3-Embedding-0.6B#

Qwen3-Embedding-0.6B is part of the latest embedding model series developed by the Qwen team. Built on the Qwen3 foundation model family, this model is designed specifically for tasks like semantic search, reranking, clustering, and classification. It’s multilingual, instruction-aware, and comes with flexible vector output dimensions, making it highly adaptable to different applications and resource constraints.

The Qwen3 embedding family also includes 4B and 8B models, which deliver even stronger performance. You can seamlessly integrate the embedding model with its reranker counterpart for full-stack semantic search systems.

Why choose it:

- Multilingual performance: Qwen3-Embedding supports over 100 natural and programming languages, making it a strong candidate for cross-lingual and multilingual applications. It performs particularly well in Chinese and English, as reflected in MTEB and C-MTEB evaluations.

- Instruction-aware architecture: Both the embedding and reranking models in the Qwen3 series support user-defined instructions. This means you can customize behavior for specific domains or tasks. The tests by the Qwen team showed that using instructions could typically yield an improvement of 1% to 5% compared to not using them.

- Flexible embedding dimensions: The model supports user-defined output dimensions ranging from 32 to 1024. This makes it ideal for deployments where resource efficiency or storage constraints are a concern.

Points to be cautious about:

- Prompt engineering matters: To achieve optimal results, especially in retrieval, you should include structured task instructions (e.g.,

Instruct: <task>\\nQuery: <query>). Omitting them can lead to performance drops.

EmbeddingGemma-300M#

Developed by Google DeepMind, EmbeddingGemma-300M is a lightweight multilingual text embedding model derived from Gemma 3. With just 300 million parameters, it’s optimized for on-device deployment, bringing high-quality text representations to laptops, tablets, and even mobile phones. Despite its compact size, it delivers strong performance across multilingual retrieval and semantic similarity tasks, rivaling much larger models on MTEB benchmarks.

Why choose it:

- Multilingual coverage: Trained on data spanning 100+ languages, EmbeddingGemma-300M supports cross-lingual and multilingual retrieval, clustering, and classification.

- Compact and efficient: The model can run in under 200MB RAM (with quantization) and generate embeddings in less than 22ms on EdgeTPU. It is an ideal option for real-time and edge applications.

- Flexible embedding dimensions: Through Matryoshka Representation Learning (MRL), EmbeddingGemma-300M supports output truncation from 768 → 512 → 256 → 128 dims, which is helpful for trading off storage versus precision.

Points to be cautious about:

- Precision requirements: EmbeddingGemma activations do not support float16. Use

float32orbfloat16as needed. - Limited context: It has a maximum input length of 2048 tokens, which is sufficient for most retrieval tasks but shorter than some larger embedding models.

Jina Embeddings v4#

Jina Embeddings v4 is a universal, multimodal, and multilingual embedding model developed by Jina AI. Built on the Qwen2.5-VL-3B-Instruct model, it is designed for complex retrieval scenarios that involve not only text but also images and visual documents such as charts, tables, or scanned pages.

Why choose it:

- Multilingual support: Jina Embeddings v4 supports over 30 languages and can be used for a wide range of domains, including technical and visually complex documents.

- Unified architecture: One model handles multiple modalities and tasks seamlessly, eliminating the need to maintain separate pipelines for text, image, and code retrieval.

- Flexible embedding types: It offers both dense (2048-dim) and multi-vector representations, with Matryoshka support for dimensionality reduction down to 128, 256, etc., allowing tradeoffs between performance and resource cost.

- Task-specific adapters: The model uses specialized adapters for retrieval, text matching, and code-related tasks, which can be dynamically selected at inference time.

Points to be cautious about:

- Non-commercial license: Jina Embeddings v4 is released under the CC-BY-NC-4.0 license, which restricts direct commercial usage. For commercial applications, you’ll need to use Jina’s managed API or contact their team.

- Adapter-specific tasks: The model uses different adapters for tasks like code and retrieval. While flexible, this requires some awareness during integration, especially if you're switching between use cases.

BGE-M3#

BGE (BAAI General Embedding) models are a family of text embedding models developed by the Beijing Academy of Artificial Intelligence (BAAI). One of the most popular versions in the series is BGE-M3. It stands out due to its versatility in multi-functionality, multi-linguality, and multi-granularity capabilities, also known as M3.

Why choose it:

- Multi-functionality: BGE-M3 can simultaneously perform the three common retrieval functionalities of embedding model: dense, multi-vector, and sparse retrieval.

- Multi-linguality: BGE-M3 supports more than 100 working languages. It learns a common semantic space for different languages. This enables both multilingual retrieval within each language and crosslingual retrieval between different languages.

- Multi-granularity: BGE-M3 is able to process inputs of different granularities, from short sentences to long documents of up to 8192 tokens.

Points to be cautious about:

- Generalizability needs further testing: While BGE-M3 performs well on benchmark datasets, the researchers think they need more tests to confirm its effectiveness across real-world datasets.

- Computational demand for long documents: Although BGE-M3 handles inputs up to 8192 tokens, processing very lengthy documents may pose challenges in terms of computational resources and efficiency.

- Performance across languages: The researchers claim multi-lingual support, but they also admit there may be potential variations in performance across different language families and linguistic features.

BGE-M3 is just one part of the broader BGE family. If you're looking for English-only alternatives, you may want to explore bge-base-en-v1.5 or bge-en-icl.

all-mpnet-base-v2#

MPNet is a novel pre-training method for language understanding tasks. It addresses limitations of masked language modeling (MLM) in BERT and permuted language modeling (PLM) in XLNet. In the MPNet family, all-mpnet-base-v2 is one of the most popular embedding models. It is designed specifically for sentence and short paragraph encoding. The original developers use a contrastive learning objective: given a sentence from a paired dataset, the model predicts which of a set of randomly sampled sentences is the correct pair.

all-mpnet-base-v2 is also a sentence-transformers model. It is able to map sentences and paragraphs into a 768-dimensional dense vector space, ideal for clustering, semantic search, and other NLP tasks.

To date, all-mpnet-base-v2 represents one of the most downloaded embedding models on Hugging Face.

Why choose it:

- Extensive training: The model is trained on over 1 billion sentence pairs to help it capture fine-grained semantic relationships.

- Fine-tuning: The model is very adaptable and can be further fine-tuned to optimize performance for specific tasks. As of this writing, it has 149 fine-tuned versions on Hugging Face.

- Flexible licensing: The model is released under the Apache 2.0 license. This means it supports both personal and commercial use in accordance with the license terms.

Points to be cautions about:

- Input length limitations: By default, the model truncates inputs longer than 384 word pieces, which may lead to a loss of context in longer text.

- Moderate performance: Compared to other models of similar size, all-mpnet-base-v2 may not perform as well on certain tasks. It does not rank particularly high on the MTEB leaderboard across a range of benchmarks.

If you want to try all-mpnet-base-v2, I also recommend all-MiniLM-L6-v2. Both are sentence-transformers models and easy to set up. For those new to AI models, they make an excellent starting point for exploring embeddings.

gte-multilingual-base#

gte-multilingual-base is the latest model in Alibaba Group’s GTE (General Text Embedding) family. It stands out for its strong performance in multilingual retrieval tasks and comprehensive representation evaluations. With 305 million parameters, this model balances high-quality embeddings with efficient resource usage.

Why choose it:

- Multilingual support: The model covers more than 70 languages, delivering reliable multilingual performance.

- Elastic dense embedding: gte-multilingual-base supports elastic output dense representations, optimizing storage and improving efficiency in downstream tasks.

- Encoder architecture: Built on an encoder-only transformer architecture, gte-multilingual-base is smaller and more resource-efficient than decode-only models like gte-qwen2-1.5b-instruct. It delivers a 10x increase in inference speed.

- Sparse vectors: In addition to dense representations, it can also generate sparse vectors.

Points to be cautious about:

- Inconsistent performance across languages: In the paper, the researchers note that performance may vary for certain languages. This is likely due to the limited language data during contrastive pre-training, which may affect the performance for these languages.

Other recommended gte models:

- gte-Qwen2-7B-instruct: A top-ranking model on the MTEB leaderboard

- gte-large-en-v1.5: A model optimized for English, with a max sequence length of 8192

Nomic Embed Text V2#

Nomic Embed Text V2 is a multilingual embedding model from Nomic AI and the first to use a Mixture-of-Experts (MoE) architecture for text embeddings. Trained on 1.6 billion contrastive pairs across ~100 languages (from mC4 and multilingual CC News), it delivers high-quality embeddings for tasks like semantic search, RAG, and recommendations.

Why choose it:

- MoE design: Unlike dense models, this architecture dynamically routes input to only a subset of its total parameters. Nomic AI researchers found alternating MoE layers with 8 experts and top-2 routing delivers the optimal balance between performance and efficiency (activate only 305M out of 475M in total during inference).

- High performance: The model shows strong performance on BEIR and MIRACL benchmarks, competitive even with models twice its size.

- Flexible dimension: Leveraging Matryoshka representation learning, it supports truncation from 768 to 256 while maintaining embedding quality. This is ideal for resource-constrained deployments.

- Open-source: Pre-training and fine-tuning datasets, training code, and model weights are all open-sourced.

Points to be cautious about:

- Prompt formatting: For optimal performance, use structured input formats like

"search_document: <text>"or"search_query: <text>". - Input length: The model supports a maximum input length of 512 tokens.

- Language variability: Performance may vary across different languages, especially low-resource ones.

What are the common use cases of embedding models?#

Embedding models are important tools that convert text, images, or other data into vector representations, capturing their underlying semantics and structure. This makes them essential in a wide range of AI applications. To name a few:

- Semantic search: Embeddings allow you to retrieve semantically similar items by encoding content (text, images, etc.) into vector space, where similar items are close to each other. In search engines, this enables users to easily find relevant content.

- Information retrieval: Embeddings enable AI models to search through large databases for documents or responses relevant to a given query. A typical use case is RAG, where retrieved data helps improve real-time content generation.

- Clustering and classification: By grouping similar data points in vector space, embeddings make it easy to classify and organize content. For example, you can group customer reviews by sentiment or documents by topic.

- Recommendation systems: Embeddings help recommendation engines understand user preferences based on the semantic similarities between user interests. This makes it possible to provide more personalized recommendations.

What should I consider when deploying embedding models?#

When deploying embedding models, consider these key factors:

- Performance and accuracy: Choose a model suited to your specific tasks, like retrieval, clustering, or classification. Review benchmarks like MTEB to ensure the model meets the desired accuracy and performance for your use case.

- Low latency and fast scaling. Real-time applications, such as search engines or chatbots, require fast, low-latency embeddings. If you have diverse traffic patterns, fast autoscaling (especially fast cold start time) is also important. BentoML provides standardized abstractions to build scalable APIs and lets you run any embedding model on BentoCloud. This inference platform provides fast and scalable infrastructure for model inference and advanced AI applications.

- Integration for complicated AI systems: Embedding models can be powerful components within compound AI solutions. A simple example is combining an embedding model with an LLM for a RAG system. BentoML offers a suite of toolkits that simplify building and scaling such AI systems, including multi-model chains, distributed orchestration, and multi-GPU serving.

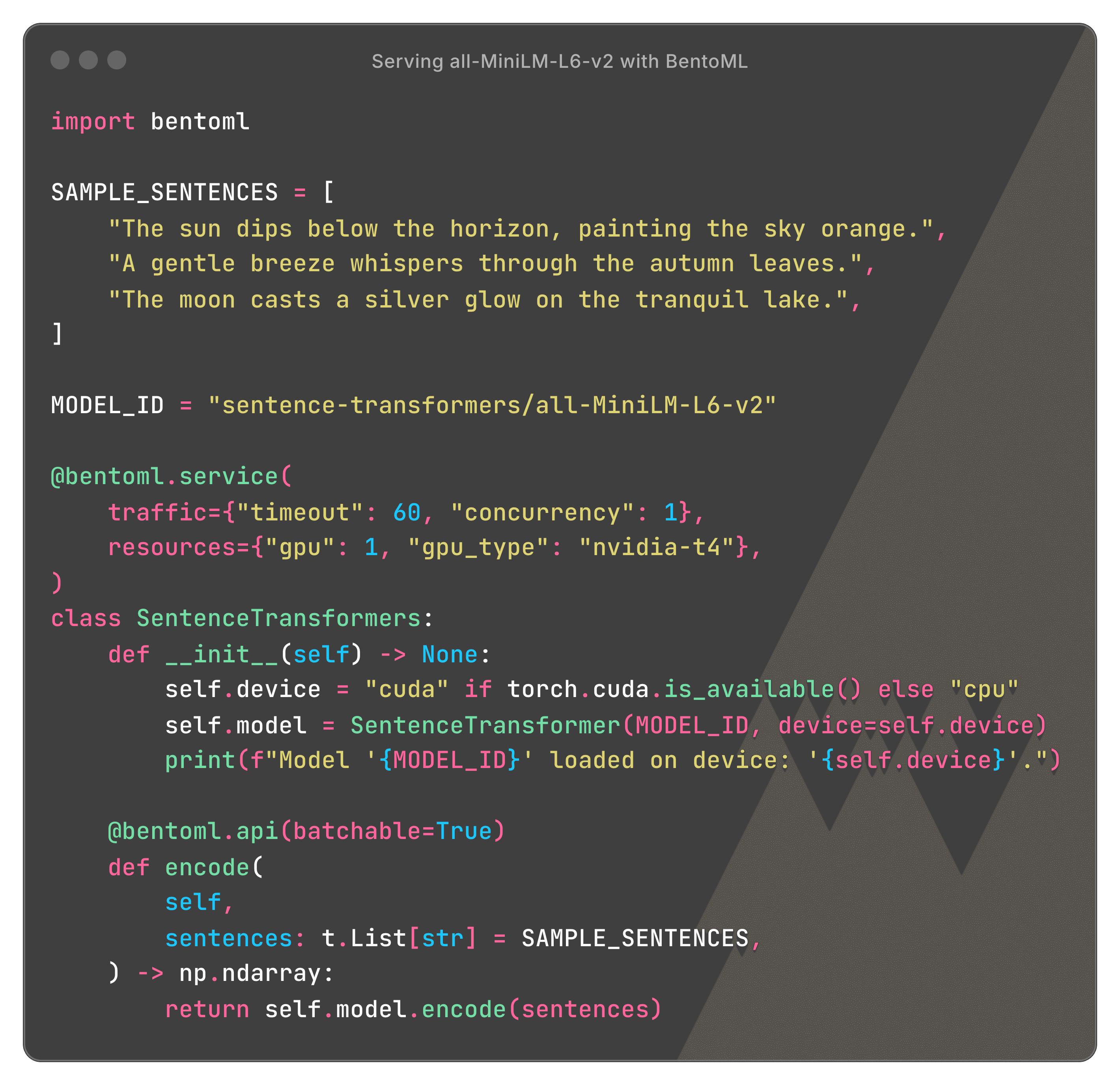

Here is a code example of serving all-MiniLM-L6-v2 with BentoML:

Deploy all-MiniLM-L6-v2Deploy all-MiniLM-L6-v2

How can I improve the quality of embeddings?#

Improving the quality of embeddings can enhance the performance of tasks like search, classification, and clustering. Common strategies include:

- Fine-tune on domain-specific data. Start with fine-tuning your embedding model on data that closely resembles your target domain. This can greatly improve relevance and semantic accuracy. It is particularly effective for specialized industries like legal, medical, or e-commerce.

- Use contrastive learning. This is probably one of the most mentioned terms when you hear somebody talking about embedding models. It simply means to train embedding models by learning to differentiate between similar (positive) and dissimilar (negative) pairs of samples. This approach helps the model better capture subtle semantic differences.

- Experiment with different embedding dimensions. Different dimensions can impact both quality and resource usage. Lower dimensions may simplify and speed up computations but could lose detail, while higher dimensions often capture richer information at the cost of more storage.

- Use multimodal embedding training: For applications that require text, images, or other data types, I highly recommend you train the model with multimodal data. This can improve embedding quality by enabling the model to capture cross-modal relationships.

Final thoughts#

It is never easy to choose the right embedding model with so many options in the market. I hope this guide has provided clarity on some of the top open-source embedding models. Actually, for each model listed, there are often many great variants worth exploring. My best advice here is to take advantage of the flexibility of open-source models by fine-tuning them with your own data. This can significantly improve embedding accuracy for your specific needs. And finally, remember that choosing the right deployment tool is just as crucial. It can make all the difference in achieving smooth, scalable, and efficient performance.

If you have questions about embedding models, check out the following resources:

- [Example] Serving a Sentence Transformers model with BentoML

- [Example] Serving CLIP with BentoML

- Choose the right NVIDIA or AMD GPUs for your model

- Choose the right deployment patterns: BYOC, multi-cloud and cross-region, on-prem and hybrid

- Sign up for BentoCloud for free to deploy your first embedding model

- Join our Slack community

- Contact us if you have any questions of deploying embedding models