Deploying an OCR Model with EasyOCR and BentoML

⚠️ Outdated content#

Note: The content in this blog post may not be applicable now. Please refer to the BentoML documentation for the latest information.

Optical Character Recognition (OCR) involves training models to recognize and convert visual representations of text into a digital format. Recent advances in deep learning have greatly improved the accuracy and capabilities of OCR systems, enabling a wide range of applications. In this blog post, I will demonstrate how to build a simple OCR application with BentoML and EasyOCR.

Note: EasyOCR is a Python module for extracting text from images. It can read both natural scene text and dense text in documents, and it supports 80+ languages.

Before you begin#

Let’s create a virtual environment first for dependency isolation.

    python -m venv venv
    source venv/bin/activate

Then, install the required dependencies.

pip install bentoml easyocr

Load and save the reader model#

Create a download_model.py file as below.

    import easyocr
    import bentoml

    reader = easyocr.Reader(['en'])
    bento_model = bentoml.easyocr.save_model('en-reader', reader)

This file uses easyocr.Reader to create a reader instance and load it into memory. I am using English here, but you can choose any language from EasyOCR’s language list. The save_model method registers the model in BentoML’s local Model Store. In addition to the model name (en-reader) and the model instance (reader), you can attach extra information about the model, such as labels and metadata.

    bentoml.easyocr.save_model(
        'en-reader',
        reader,
        labels={
            "type": "ocr",
            "stage": "dev",
        },
        metadata={
            "data_version": "20230816",
        }
    )

Run this script and the model should be saved to the Model Store.

python3 download_model.py

View all the models in the Store.

    $ bentoml models list

    Tag                                                 Module                Size        Creation Time
    en-reader:vjcnlxr3j6gvanry                          bentoml.easyocr       93.87 MiB   2023-08-15 17:39:44
    vit-extractor-pneumonia:vnthazb3doxkwnry            bentoml.transformers  996.00 B    2023-08-15 11:27:32
    vit-model-pneumonia:vjevqsr3doxkwnry                bentoml.transformers  327.37 MiB  2023-08-15 11:27:30
    summarization:phsyikr2okkgonry                      bentoml.transformers  1.14 GiB    2023-08-14 15:16:24
    zero-shot-classification-pipeline:zrakh6bvcobuunry  bentoml.transformers  852.36 MiB  2023-08-07 19:16:04
    summarization-pipeline:s7altrbvcobuunry             bentoml.transformers  1.14 GiB    2023-08-07 19:14:36

Create a BentoML Service#

Create a BentoML Service (by convention, service.py) with an API endpoint to expose the model externally.

    import bentoml
    import PIL.Image
    import numpy as np

    runner = bentoml.easyocr.get("en-reader:latest").to_runner()

    svc = bentoml.Service("ocr", runners=[runner])

    @svc.api(input=bentoml.io.Image(), output=bentoml.io.Text())
    async def transcript_text(input: PIL.Image.Image) -> str:
        results = await runner.readtext.async_run(np.asarray(input))
        texts = '\n'.join([item[1] for item in results])
        return texts

Let’s look at the file in more detail.

The bentoml.easyocr.get method retrieves the model from the Model Store. Alternatively, you can use the bentoml.models.get method for the same purpose. Note that BentoML provides a framework-specific get method for each framework module. Unlike bentoml.models.get, these methods verify that the model found matches the specified framework.

With the retrieved model, to_runner creates a Runner instance. In BentoML, Runners are units of computation. As BentoML uses a microservices architecture to serve AI applications, Runners allow you to combine different models, scale them independently, and even assign different resources (e.g. CPU and GPU) to them. bentoml.Service then creates a Service with the Runner wrapped in it.

Lastly, use a decorator for the transcript_text function to define an API endpoint. The function expects an image input, which is converted to a NumPy array before being passed to the model, and returns plain text. More specifically, the model returns the following information for each piece of text it detects in a given image.

- A list of coordinates indicating the bounding box of the detected text.

- The detected text.

- Confidence scores.

I only need the detected text, so my code extracts only that (index 1 in each item of the results) and joins the strings with newline characters to create a single string of all detected text.
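The extraction step can be tried without running the model. The following is a minimal sketch that uses hypothetical sample data in the (bounding box, text, confidence) shape described above. The extract_text helper and its min_confidence parameter are my own illustration rather than part of EasyOCR or BentoML.

```python
# Hypothetical detections in the (bounding box, text, confidence) shape.
sample_results = [
    ([[10, 10], [200, 10], [200, 40], [10, 40]], "Deploying to production", 0.98),
    ([[10, 60], [30, 60], [30, 80], [10, 80]], "+", 0.31),
    ([[40, 60], [260, 60], [260, 80], [40, 80]], "Package model files", 0.92),
]


def extract_text(results, min_confidence=0.0):
    """Join detected strings with newlines, skipping low-confidence detections."""
    return "\n".join(
        text for _bbox, text, confidence in results if confidence >= min_confidence
    )


# With the default threshold, every detection is kept (same as item[1] + join).
print(extract_text(sample_results))

# A higher threshold drops the uncertain "+" detection.
print(extract_text(sample_results, min_confidence=0.5))
```

Filtering by confidence is a trade-off: it removes noise but can also discard short, legitimate text, so any threshold would need tuning on your own images.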

Start the Service. Add the --reload flag to reload the Service when code changes are detected.

    $ bentoml serve service:svc --reload

    2023-08-16T12:00:07+0800 [INFO] [cli] Environ for worker 0: set CPU thread count to 12
    2023-08-16T12:00:07+0800 [INFO] [cli] Prometheus metrics for HTTP BentoServer from "service:svc" can be accessed at http://localhost:3000/metrics.
    2023-08-16T12:00:08+0800 [INFO] [cli] Starting production HTTP BentoServer from "service:svc" listening on http://0.0.0.0:3000 (Press CTRL+C to quit)

Interact with the Service by visiting http://0.0.0.0:3000 or sending a request via curl. Replace bentoml-ocr.png in the following example with your own file.

    curl -X 'POST' \
      'http://0.0.0.0:3000/transcript_text' \
      -H 'accept: text/plain' \
      -H 'Content-Type: image/png' \
      --data-binary '@bentoml-ocr.png'
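If you prefer to call the endpoint from Python, the same request can be sketched with only the standard library. This assumes the Service is running locally and reachable at localhost on port 3000. The build_request and transcribe helpers are hypothetical names used for illustration.

```python
import urllib.request

# Assumed endpoint: the Service started above, reached via localhost.
ENDPOINT = "http://localhost:3000/transcript_text"


def build_request(image_bytes, url=ENDPOINT, content_type="image/png"):
    """Build a POST request carrying raw image bytes, mirroring the curl call."""
    return urllib.request.Request(
        url,
        data=image_bytes,
        headers={"accept": "text/plain", "Content-Type": content_type},
        method="POST",
    )


def transcribe(path, url=ENDPOINT):
    """Send the image file at `path` to the Service and return the response text."""
    with open(path, "rb") as image_file:
        request = build_request(image_file.read(), url)
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")
```

With the Service running, print(transcribe("bentoml-ocr.png")) should print the detected text for that file.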

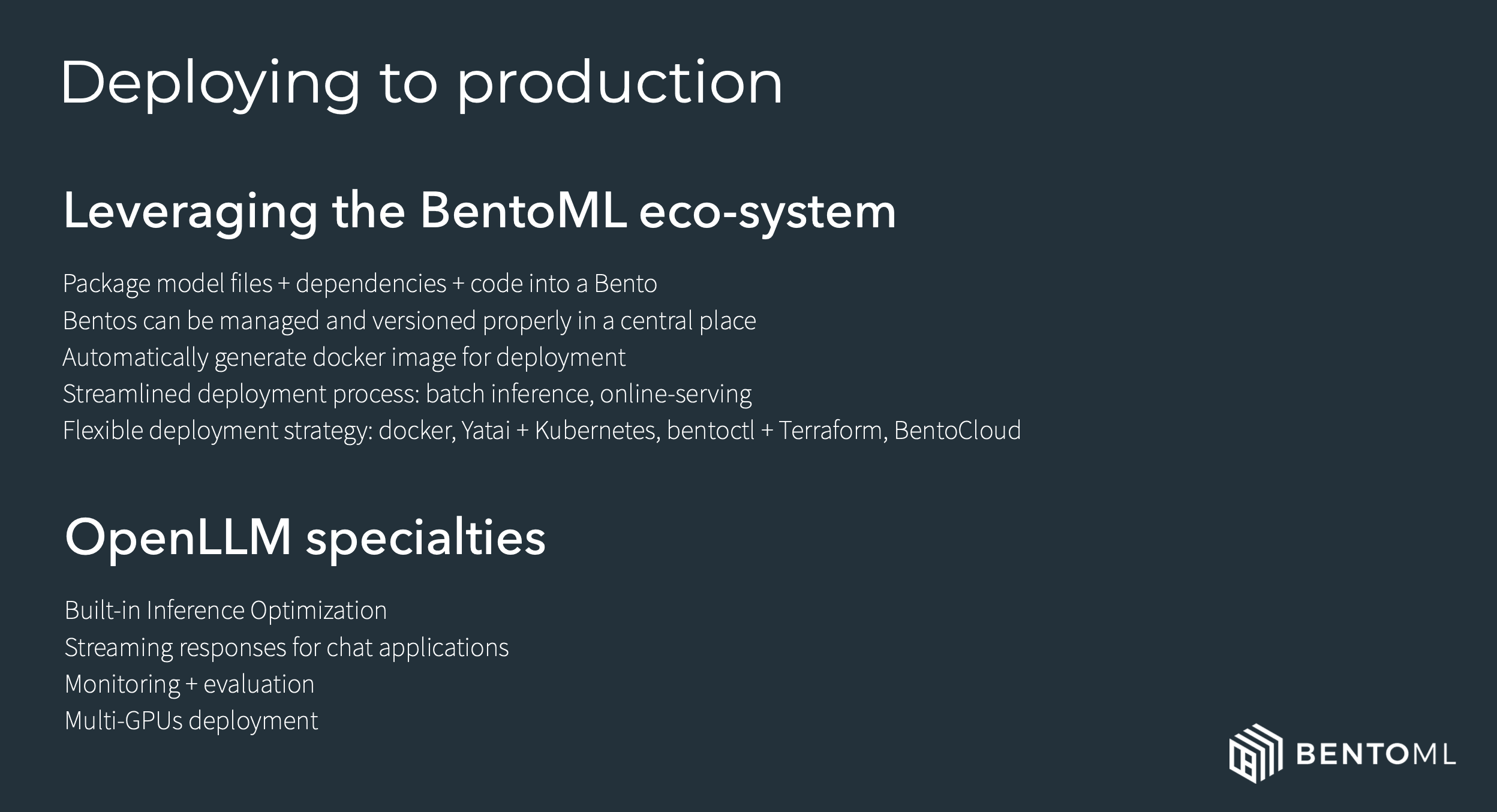

This is the image used in the command:

The output from the model:

Deploying to production Leveraging the BentoML eco-system Package model files + dependencies + code into a Bento Bentos can be managed and versioned properly in a central place Automatically generate docker image for deployment Streamlined deployment process: batch inference, online-serving Flexible deployment strategy: docker; Yatai + Kubernetes; bentoctl + Terraform, BentoCloud OpenLLM specialties Built-in Inference Optimization Streaming responses for chat applications Monitoring + evaluation Multi-GPUs deployment BENTOML

The result is not perfect, as the “+” icons are rendered on separate lines, but the model has detected most of the text. As I mentioned above, the model’s output also includes the bounding boxes and confidence scores. You can customize the code as needed to change the output format.
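One possible customization is to use the bounding boxes to put detections that sit on the same visual row back onto one line, which would keep a “+” icon next to its label. The sketch below is a simple heuristic of my own, not an EasyOCR feature. It assumes each bounding box is a list of four [x, y] corner points, and the sample data is hypothetical.

```python
from statistics import mean


def merge_into_lines(results, tolerance=0.5):
    """Group detections on the same visual row and join each row left to right.

    `results` holds (bounding_box, text, confidence) items, where the bounding
    box is assumed to be four [x, y] corner points. A detection joins the
    current row when its vertical center is within `tolerance` times the taller
    box height of the detection that started the row.
    """
    items = []
    for bbox, text, _confidence in results:
        xs = [point[0] for point in bbox]
        ys = [point[1] for point in bbox]
        items.append((mean(ys), min(xs), max(ys) - min(ys), text))

    items.sort(key=lambda item: item[0])  # top to bottom by vertical center

    rows = []
    for center, left, height, text in items:
        last = rows[-1] if rows else None
        if last and abs(center - last["center"]) <= tolerance * max(height, last["height"]):
            last["parts"].append((left, text))
        else:
            rows.append({"center": center, "height": height, "parts": [(left, text)]})

    # Within each row, order the pieces by their left edge.
    return "\n".join(
        " ".join(text for _left, text in sorted(row["parts"]))
        for row in rows
    )


# Hypothetical detections: a "+" icon sitting next to its label.
sample_results = [
    ([[40, 98], [300, 98], [300, 124], [40, 124]], "Package model files", 0.92),
    ([[10, 20], [400, 20], [400, 60], [10, 60]], "Deploying to production", 0.98),
    ([[10, 100], [30, 100], [30, 120], [10, 120]], "+", 0.31),
]
print(merge_into_lines(sample_results))
```

For this sample, the heading stays on its own line and the “+” is joined with “Package model files”. Skewed or multi-column layouts would likely need a more careful grouping rule.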

Package the model#

Once the BentoML Service is ready, package the model into a Bento, the standardized distribution format in BentoML. To create a Bento, define a bentofile.yaml file as below. See Bento build options to learn more.

    service: 'service:svc'
    include:
      - '*.py'
    python:
      packages:
        - easyocr
    models:
      - en-reader:latest

Run bentoml build in your project directory to build the Bento.

    $ bentoml build

    ██████╗ ███████╗███╗   ██╗████████╗ ██████╗ ███╗   ███╗██╗
    ██╔══██╗██╔════╝████╗  ██║╚══██╔══╝██╔═══██╗████╗ ████║██║
    ██████╔╝█████╗  ██╔██╗ ██║   ██║   ██║   ██║██╔████╔██║██║
    ██╔══██╗██╔══╝  ██║╚██╗██║   ██║   ██║   ██║██║╚██╔╝██║██║
    ██████╔╝███████╗██║ ╚████║   ██║   ╚██████╔╝██║ ╚═╝ ██║███████╗
    ╚═════╝ ╚══════╝╚═╝  ╚═══╝   ╚═╝    ╚═════╝ ╚═╝     ╚═╝╚══════╝

    Successfully built Bento(tag="ocr:ib3cjvb36kf4mnry").

    Possible next steps:

     * Containerize your Bento with `bentoml containerize`:
        $ bentoml containerize ocr:ib3cjvb36kf4mnry  [or bentoml build --containerize]

     * Push to BentoCloud with `bentoml push`:
        $ bentoml push ocr:ib3cjvb36kf4mnry [or bentoml build --push]

View all available Bentos:

    $ bentoml list

    Tag                               Size        Creation Time
    ocr:ib3cjvb36kf4mnry              106.00 MiB  2023-08-16 13:11:47
    summarization:bkutamr2osncanry    13.62 KiB   2023-08-14 15:27:36
    yolo_v5_demo:hen2gzrrbckwgnry     14.17 MiB   2023-08-02 15:43:09
    iris_classifier:awln3pbmlcmlonry  78.84 MiB   2023-07-27 16:38:42

Containerize the Bento with Docker:

bentoml containerize ocr:ib3cjvb36kf4mnry

The newly-created image has the same tag as the Bento:

    $ docker images

    REPOSITORY       TAG               IMAGE ID      CREATED        SIZE
    ocr              ib3cjvb36kf4mnry  143b4fbf6858  7 seconds ago  1.5GB
    iris_classifier  awln3pbmlcmlonry  ffb253bcd646  2 weeks ago    906MB
    summarization    ulnyfbq66gagsnry  da287141ef3e  5 weeks ago    2.43GB

With the Docker image, you can deploy the application to any Docker-compatible environment, such as Kubernetes. Alternatively, push the Bento to BentoCloud, a serverless platform in the BentoML ecosystem that allows you to run and scale AI applications.

Summary#

We’ve walked through building and deploying an OCR model using EasyOCR and BentoML, from setting up the environment to packaging the model into a Docker container. As always, the success of an OCR model depends not only on the technology but also on the quality and diversity of the training data. The results can always be enhanced with more specialized training, parameter tuning, or post-processing steps. The path is rich with possibilities, and I encourage you to experiment, iterate, and share your findings with BentoML.

Happy coding ⌨️, and until next time!