Function Calling with Open-Source LLMs


Today, we're witnessing a shift toward LLMs becoming integral parts of compound AI systems. In this new paradigm, LLMs are no longer standalone models. Instead, they are embedded in larger, more complex architectures that require them to connect with external APIs, databases, and custom functions. One of the most significant advancements in this area is function calling.

In this blog post, we’ll explore function calling, with a special focus on open-source LLMs. This post is also the first in our blog series on function calling with open-source models, where we will cover:

- An overview of function calling with open-source models.

- A detailed walkthrough of deploying function calling systems using BentoML, with Llama 3.1 as an example.

- Performance optimization and scaling strategies for open-source LLMs in function calling.

- A review of the best open-source models for function calling.

What is function calling?

First, it's crucial to understand that function calling doesn't fundamentally change how LLMs operate. At their core, these models are still transformers that predict the next token in a sequence. Function calling simply adds a structured mechanism for interacting with external tools.

Function calling is essentially a structured way of prompting an LLM with detailed function definitions and descriptions. The LLM uses them to generate output that names a function and supplies its arguments. The application, not the model, then executes the function, and the result can often be fed back into the LLM for multi-turn conversations.

Here's a simple example of how a function definition might look:

```json
{
  "name": "get_delivery_date",
  "description": "Get the delivery date for a customer's order. Call this whenever you need to know the delivery date, for example when a customer asks 'Where is my package'",
  "parameters": {
    "type": "object",
    "properties": {
      "order_id": {
        "type": "string",
        "description": "The customer's order ID."
      }
    },
    "required": ["order_id"],
    "additionalProperties": false
  }
}
```
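To make the definition above concrete, here is a minimal sketch of how an application might dispatch a call that the model produces against it. No real LLM is involved: the model output is a hard-coded string, and the `TOOLS` registry, the `dispatch` helper, the `{"name": ..., "arguments": ...}` call shape, and the order data are all assumptions made for illustration.

```python
import json


# Hypothetical local implementation of the tool described by the definition.
def get_delivery_date(order_id: str) -> str:
    # Stand-in data; a real system would query an order database.
    fake_orders = {"A-1001": "2024-09-12"}
    return fake_orders.get(order_id, "unknown")


# Registry mapping tool names (as the model sees them) to Python callables.
TOOLS = {"get_delivery_date": get_delivery_date}


def dispatch(tool_call_json: str) -> dict:
    """Parse a model-produced tool call and run the matching function."""
    call = json.loads(tool_call_json)
    func = TOOLS[call["name"]]          # KeyError if the model names an unknown tool
    result = func(**call["arguments"])  # arguments must match the declared parameters
    # This message would be appended to the conversation so the model can
    # phrase a final answer for the user.
    return {"role": "tool", "name": call["name"], "content": json.dumps(result)}


# Simulated model output (assumed call shape; real formats vary by model).
model_output = '{"name": "get_delivery_date", "arguments": {"order_id": "A-1001"}}'
tool_message = dispatch(model_output)
print(tool_message)
```

The key point is that the model only produces the JSON; the application decides whether and how to run the function.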

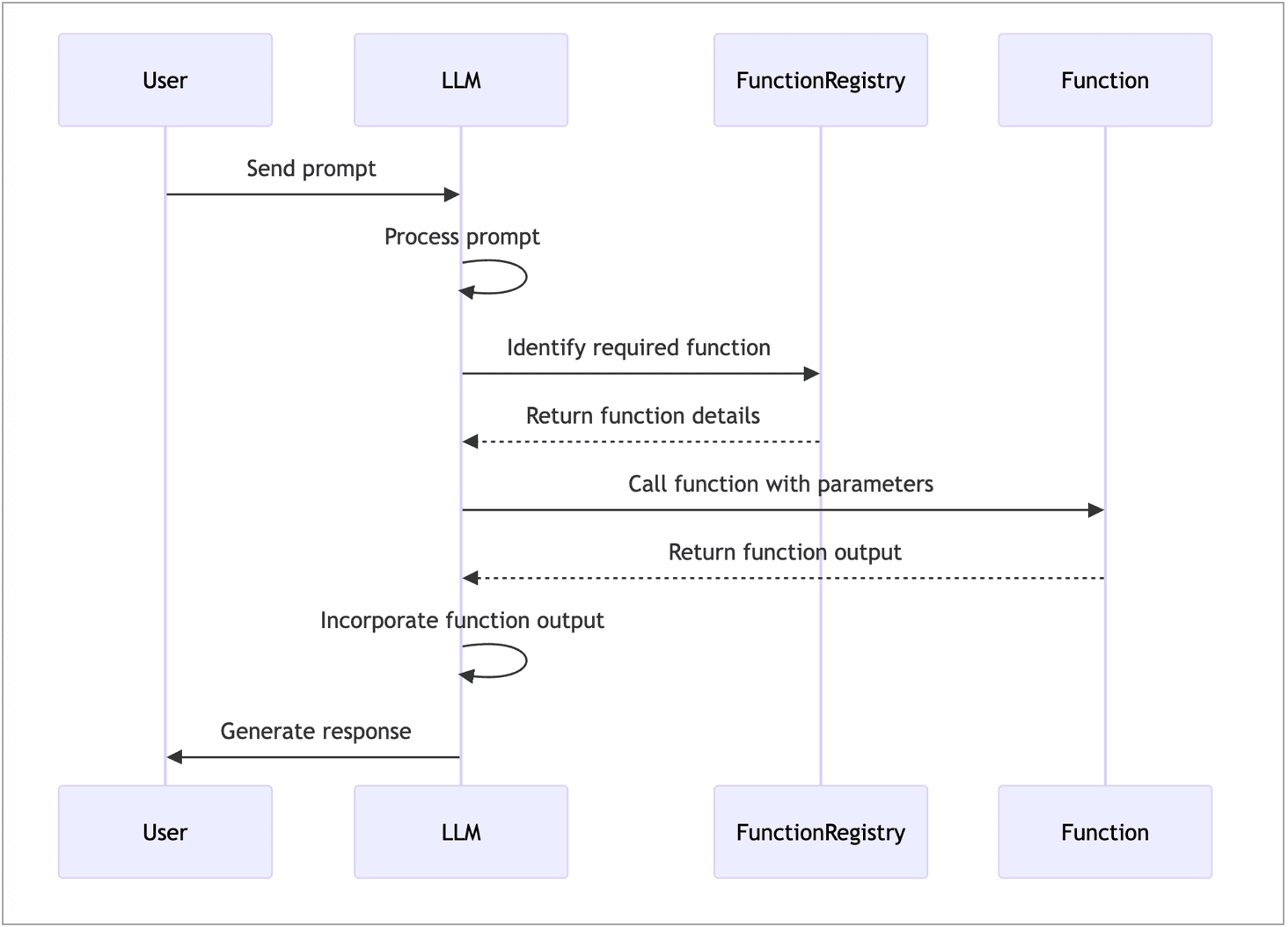

Here is a high level workflow diagram of function calling:

Why should you use function calling?

Function calling expands the capabilities of LLMs, giving them access to up-to-date or proprietary knowledge and allowing them to interact with other systems. The key benefits include:

- Integrating with external data sources. LLMs can query databases, access APIs, or retrieve information from proprietary knowledge bases. For example, when users ask for the latest exchange rate between USD and CAD, the LLM can fetch real-time data from an API.

- Taking actions via API calls or user-defined code. LLMs can trigger actions in other systems and run specialized tasks or models. For example:

  - An LLM analyzes a sales call transcript and updates a CRM system with a call summary.

  - ChatGPT calls DALL-E 3 for image generation based on text descriptions.

What are the use cases of function calling?

The primary use cases of function calling fall into two major categories.

Agents

Function calling allows you to create intelligent AI agents capable of interacting with external systems and performing complex tasks:

- Fetch data: AI agents can perform tasks such as web searches, fetching data from APIs, or browsing the internet to provide real-time information. For example, ChatGPT can retrieve the latest news or weather updates by invoking external services.

- Execute complex workflows: By leveraging function calling, agents can execute complex workflows that involve multiple, parallel, or sequential tasks. For example, an agent might book a flight, update a calendar, and send a confirmation email, all within a single multi-turn interaction.

- Implement agentic RAG: Function calling makes it possible to dynamically generate SQL queries or API calls, which means LLMs can fetch relevant information from databases or knowledge repositories.
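As a rough illustration of the agentic RAG case, the sketch below exposes a database to the model through a single tool that runs model-generated SQL. The `run_readonly_sql` helper, the `orders` table, and the generated query are hypothetical, and the `SELECT` prefix check is a deliberately naive guard, not a complete defense against unsafe queries.

```python
import sqlite3


def run_readonly_sql(conn: sqlite3.Connection, query: str) -> list:
    """Hypothetical tool: execute a model-generated query, allowing only SELECT."""
    if not query.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed")
    return conn.execute(query).fetchall()


# In-memory database with illustrative data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("A-1001", "shipped"), ("A-1002", "pending")],
)

# Simulated model output: the SQL an LLM might generate for
# "Which orders have shipped?"
generated_query = "SELECT order_id FROM orders WHERE status = 'shipped'"
rows = run_readonly_sql(conn, generated_query)
print(rows)
```

In a real deployment, the rows would be serialized and returned to the model as the tool result, and the database connection would typically use read-only credentials as well.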

Data extraction

Function calling requires the LLM to express the function call in the exact format defined by the function’s signature and description. JSON is the most common representation for the call, which makes function calling an effective tool for extracting structured data from unstructured text.

For example, an LLM with function-calling capabilities can process large volumes of medical records, extracting key information such as patient names, diagnoses, prescribed medications, and treatment plans. By converting this unstructured data into structured JSON objects, healthcare providers can easily parse the information for further analysis, reporting, or integration into EHR systems.
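A minimal sketch of the receiving side of such an extraction pipeline might look like the following. It assumes the model has already returned a JSON string; the `PatientRecord` fields, the `parse_record` helper, and the sample record are invented for illustration and use only the standard library.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class PatientRecord:
    # Hypothetical target schema for the extraction.
    patient_name: str
    diagnosis: str
    medications: list


def parse_record(raw: str) -> PatientRecord:
    """Parse model output and reject it if the field set does not match exactly."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {f.name for f in fields(PatientRecord)}
    if set(data) != expected:
        raise ValueError(f"unexpected or missing fields: {sorted(set(data) ^ expected)}")
    return PatientRecord(**data)


# Simulated model output for an invented clinical note.
raw_output = (
    '{"patient_name": "Jane Doe", "diagnosis": "hypertension", '
    '"medications": ["lisinopril"]}'
)
record = parse_record(raw_output)
print(record)
```

Rejecting records with missing or extra fields keeps malformed extractions out of downstream systems instead of silently storing partial data.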

Open-source vs. proprietary models for function calling

Proprietary LLMs like GPT-4 come with built-in support for function calling, making integration more straightforward. By contrast, when using open-source models like Mistral or Llama 3.1, the process can be more complex and require additional customization.

So, why choose open-source models for function calling?

- Data security and privacy. Many enterprises prioritize keeping sensitive data and processes in-house. Open-source LLMs provide greater control over data handling and privacy.

- Proprietary integration. Many function calling use cases involve accessing proprietary systems, data sources, or knowledge bases. For example, an LLM agent responsible for automating a business-critical process may need access to sensitive, organization-specific workflows and data. Private deployment with open-source models can keep proprietary information secure and private.

- Customization. Open-source models can be fine-tuned to adapt to specific formats or domain-specific languages (DSLs), often using proprietary and sensitive training data.

Challenges in function calling with open-source LLMs

While open-source LLMs offer advantages in terms of flexibility and customization, you may find it difficult to implement function calling with these models given the following challenges:

Constrained output

One of the primary challenges is getting open-source LLMs to produce outputs in certain formats for function calling, such as:

- Structured outputs. Function calling often relies on well-structured data formats like JSON or YAML that match the expected parameters of the function being called. This is vital to making function calling reliable, especially when the LLM is integrated into automated workflows. However, open-source LLMs can sometimes deviate from the instructions and produce outputs that are not properly formatted or contain unnecessary information.

  Several tools and techniques have been developed to address this challenge, such as Outlines, Instructor, and Jsonformer. The following example uses Pydantic to define a JSON schema and passes it to the OpenAI client as the response format:

  ```python
  from pydantic import BaseModel
  from openai import OpenAI

  # OpenAI-compatible endpoint serving Llama 3.1 70B Instruct (AWQ INT4).
  base_url = "https://bentovllm-llama-3-1-70-b-instruct-awq-service-0nek.realchar-guc1.bentoml.ai/v1"
  model = "hugging-quants/Meta-Llama-3.1-70B-Instruct-AWQ-INT4"

  client = OpenAI(base_url=base_url, api_key="n/a")


  # The Pydantic model doubles as the JSON schema the output must follow.
  class CalendarEvent(BaseModel):
      name: str
      date: str
      participants: list[str]


  if __name__ == "__main__":
      completion = client.beta.chat.completions.parse(
          model=model,
          messages=[
              {"role": "system", "content": "Extract the event information."},
              {"role": "user", "content": "Alice and Bob are going to a science fair on Friday."},
          ],
          response_format=CalendarEvent,
      )

      # `parsed` is a CalendarEvent instance built from the model's JSON output.
      event = completion.choices[0].message.parsed
      print(type(event), event, event.model_dump())
  ```

- Specialized formats. Certain tasks require LLMs to generate more specialized outputs, such as SQL queries or DSLs. This is often implemented using grammar mode or regex mode, which restrict the LLM's output to match a predefined format. Here’s an example of how you can use Outlines to constrain the output of an LLM with a regex, in this case one that matches an IPv4 address.

  ```python
  from outlines import models, generate

  model = models.transformers("microsoft/Phi-3-mini-128k-instruct")

  # Four dot-separated octets, each in the range 0-255.
  regex_str = r"((25[0-5]|2[0-4]\d|[01]?\d\d?)\.){3}(25[0-5]|2[0-4]\d|[01]?\d\d?)"
  generator = generate.regex(model, regex_str)

  result = generator("What is the IP address of localhost?\nIP: ")
  print(result)
  # e.g. 127.0.0.100
  # The regex guarantees the format of the output, not that the answer is correct.
  ```
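When constrained decoding is not available, a lighter fallback for the "unnecessary information" problem is to pull the JSON object out of the surrounding text after generation. The sketch below does this with the standard library only; the `extract_first_json_object` helper and the noisy sample output are assumptions for illustration, and this approach detects and recovers from extra text but cannot repair JSON that is itself malformed.

```python
import json


def extract_first_json_object(text: str) -> dict:
    """Return the first JSON object embedded in free-form model output."""
    decoder = json.JSONDecoder()
    start = text.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value starting at `start` and
            # ignores whatever text follows it.
            obj, _end = decoder.raw_decode(text, start)
            if isinstance(obj, dict):
                return obj
        except json.JSONDecodeError:
            pass  # not valid JSON from this brace; try the next one
        start = text.find("{", start + 1)
    raise ValueError("no JSON object found in model output")


# Simulated model output that wraps the call in conversational text.
noisy = (
    "Sure! Here is the call:\n"
    '{"name": "get_delivery_date", "arguments": {"order_id": "A-1001"}}\n'
    "Let me know if you need anything else."
)
call = extract_first_json_object(noisy)
print(call["name"], call["arguments"])
```

A common pattern is to retry the request, or fall back to a constrained-decoding tool such as those mentioned above, when this function raises.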

LLM capabilities

Open-source LLMs differ in how well they support the various types of function calls: single, parallel, and nested (sequential).
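To illustrate the difference between these call types, here is a small sketch with simulated model outputs. The tools (`get_weather`, `find_order_id`, `get_delivery_date`), their data, and the call shapes are hypothetical. In particular, representing a nested call as an argument that is itself a call is an assumption made to keep the example short; in practice, sequential calls are often spread across several conversation turns.

```python
# Hypothetical tools with stand-in data.
def get_weather(city: str) -> str:
    return f"sunny in {city}"


def find_order_id(customer: str) -> str:
    return {"alice": "A-1001"}[customer]


def get_delivery_date(order_id: str) -> str:
    return {"A-1001": "2024-09-12"}[order_id]


TOOLS = {
    "get_weather": get_weather,
    "find_order_id": find_order_id,
    "get_delivery_date": get_delivery_date,
}


def run_call(call: dict):
    """Run one call; an argument that is itself a call is resolved first."""
    args = {}
    for key, value in call["arguments"].items():
        if isinstance(value, dict) and "name" in value:
            value = run_call(value)  # nested: inner result feeds the outer call
        args[key] = value
    return TOOLS[call["name"]](**args)


# Single: one call, one result.
single = {"name": "get_weather", "arguments": {"city": "Paris"}}

# Parallel: independent calls emitted together; order does not matter.
parallel = [
    {"name": "get_weather", "arguments": {"city": "Paris"}},
    {"name": "get_weather", "arguments": {"city": "Tokyo"}},
]

# Nested (sequential): the second call depends on the first call's output.
nested = {
    "name": "get_delivery_date",
    "arguments": {
        "order_id": {"name": "find_order_id", "arguments": {"customer": "alice"}}
    },
}

single_result = run_call(single)
parallel_results = [run_call(c) for c in parallel]
nested_result = run_call(nested)
print(single_result, parallel_results, nested_result)
```

A model that only supports single calls would need three separate turns to cover the parallel case, which is why these capabilities matter for latency as well as correctness.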

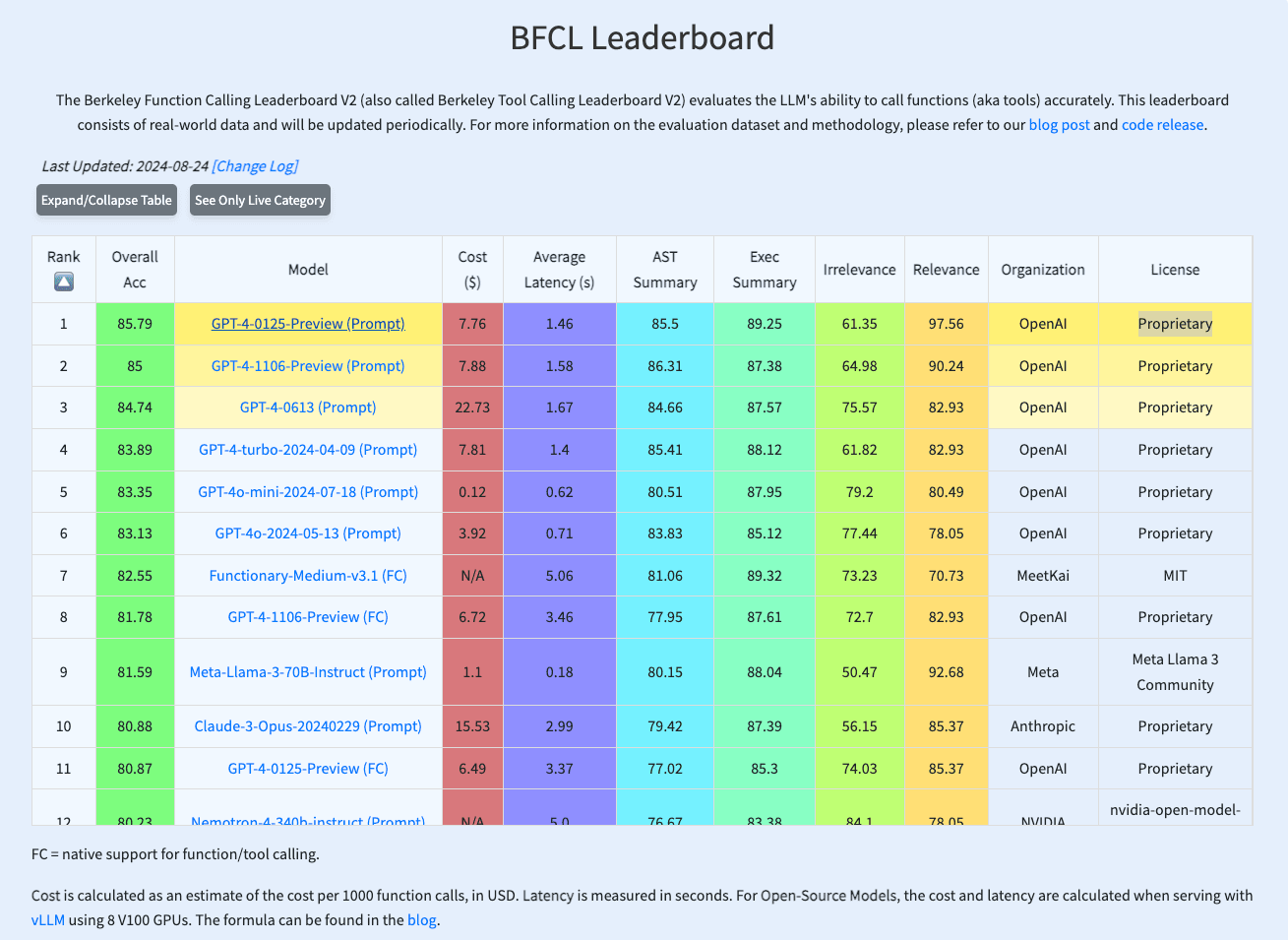

For a comprehensive comparison of LLM function calling capabilities, check out the Berkeley Function Calling Leaderboard.

Building and scaling compound AI systems

An LLM application with function calling typically includes multiple components, such as model inference, custom business logic, user-defined functions, and external API integrations. Orchestrating these components in a production environment can be complex and challenging.

At BentoML, we are working to provide the complete platform for enterprise AI teams to build and scale compound AI systems. It seamlessly brings cutting-edge AI infrastructure into your cloud environment, enabling AI teams to run inference with unparalleled efficiency, rapidly iterate on system design, and effortlessly scale in production with full observability.

Conclusion

Function calling transforms LLMs into dynamic tools capable of interacting with real-time data and executing complex workflows. While proprietary models like GPT-4 offer built-in function calling, open-source LLMs provide enterprises with greater flexibility, privacy, and customization options. Stay tuned for our next post, where we'll dive deep into code examples of implementing function calling with open-source LLMs using BentoML!

Check out the following resources to learn more:

- LLM structured outputs

- Function calling

- Read our LLM Inference Handbook

- Sign up for BentoCloud to deploy your first function calling system

- Join our Slack community

- Contact us if you have any questions about building and scaling compound AI systems