Introducing Bentoctl: Model Deployment Anywhere

Jun 28, 2022 • Bozhao Yu

TL;DR: bentoctl, the newest offering from BentoML, lets you deploy your ML models to any cloud platform with ease.

BentoML was founded to provide data scientists with a practical framework for packaging ML models and feature extraction code into a high-performance ML service. Today, our open source framework is used by hundreds of organizations around the world, powering hundreds of millions of requests a day.

But we didn’t just stop there. ML teams ultimately need their prediction services deployed to production. The final step of getting these models into a live environment is key for businesses to make predictions and ultimately get value from the model.

Conventional wisdom says to deploy your prediction service with your company’s existing development pipelines, and this has become a best practice in MLOps for several reasons: it decreases the learning curve, it’s easier to maintain, and it gives teams a scalable way to deploy more services more frequently.

Easier said than done though, right? Introducing Bentoctl.

What Is Bentoctl?

Bentoctl, the newest offering from BentoML, helps facilitate the handoff of an ML model from data scientists to DevOps engineers by providing an easy way to integrate the service with existing deployment pipelines.

Why Did We Build Bentoctl?

If you’re familiar with BentoML, you may know that our first iteration of Bentoctl provided a single command to help deploy your Bentos (What’s a Bento?) to different cloud services. Over time, we noticed that cloud deployments were growing more complex and involving more stakeholders, namely DevOps teams. Justifiably, DevOps teams wanted to incorporate their own existing resources and patterns when shipping ML models to production. Our first iteration of Bentoctl, while simple and convenient, wasn’t fully meeting these new requirements we were hearing about from DevOps.

With Bentoctl, we had the opportunity to rethink the ideal MLOps integration with existing pipelines. Instead of complex collaborations that result in boutique solutions, Bentoctl separates the concerns of different stakeholders and lets each of them use the tools they’re familiar with to customize solutions as they see fit.

The outcome: Data scientists are able to ship their best models in the BentoML standard and DevOps teams can use Terraform scripts to easily integrate with production pipelines and infrastructure.

Everybody wins!

How It Works

Bentoctl prepares your Bento for deployment into any compute environment. Using the cloud service’s specification, Bentoctl generates the necessary Terraform scripts to automate model deployment into any cloud environment (not just Kubernetes!).

Today, we support:

• Lambda

• ECS

• EC2

• Heroku

Of course, if you want to deploy into Kubernetes, we’ve got Yatai for that. ;)

Deployment: No Customization Necessary

The requirements for each cloud environment can vary dramatically, which can make model deployment intimidating and challenging if you don’t understand the nuances of each one. For example, SageMaker requires very specific endpoints to be configured in order to deploy a service.

Bentoctl leverages BentoML’s Bento format, which provides a standard layout and configuration for prediction services, to automatically rebuild the Bento into the form that fits the particular cloud’s requirements.

What this means is that users don’t have to change how they develop and build their model server to deploy it to a particular service. More importantly, they don’t have to understand the nuances of the cloud that they are deploying into, eliminating blockers and learning curves prior to getting models deployed.

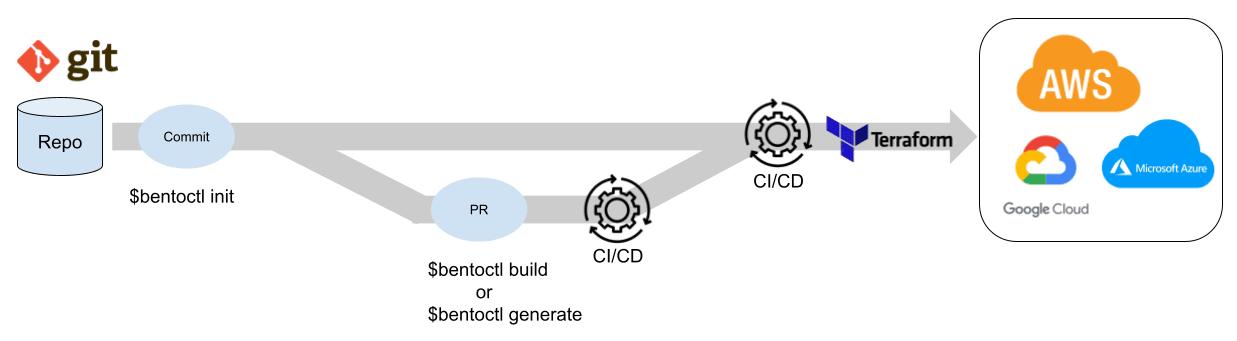

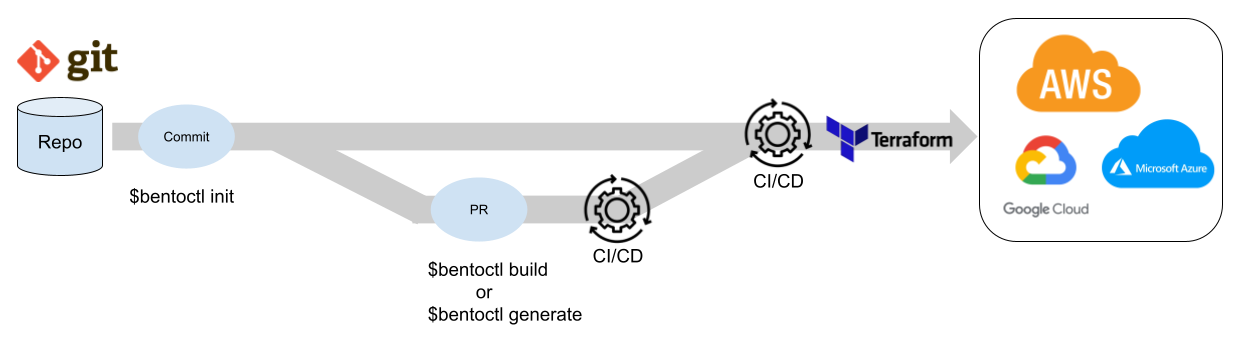

Built For GitOps Workflows

Bentoctl was designed with the increasingly popular GitOps workflow in mind. We see a lot of teams adopting this workflow in their automated deployment pipelines.

The GitOps framework has three core components:

1. Infrastructure as Code (IaC)

2. Pull requests (PR)

3. Continuous integration and continuous deployment (CI/CD)

By separating the generation of the deployable artifact and the infrastructure configuration, DevOps teams can now more easily fit Bento services into their existing pipelines.

How Do Teams Put Bentoctl Into Their GitOps Practice?

Bentoctl uses Terraform, the most widely used IaC product on the market, to provision resources.

Generating the scaffolding

Getting started with Bentoctl is as easy as running the command `bentoctl init`. This will kick off an interactive process that generates the standard configuration and necessary Terraform project files. It’s important to understand that these Terraform files are deployable out-of-the-box to create a new prediction service. However, they are also easily customizable given that they are standard Terraform scripts. All of these files can be checked into a Git repo as Terraform recommends.

Building the image

Once you’ve generated your Terraform scaffolding, the `bentoctl build` command packages your prediction service according to the location you’re deploying to. For example, Lambda has very specific deployment requirements, and Bentoctl automatically follows them when building the deployable service. This step also updates the Terraform variables file with the newly built image information.
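As a rough illustration of how this step might be scripted in a CI job, the sketch below assembles the build and deploy commands as argument lists and prints them in dry-run mode. Only `bentoctl build` comes from the text above. The `-b` and `-f` flags, the file names `deployment_config.yaml` and `bentoctl.tfvars`, the Bento tag, and the helpers `deployment_commands` and `run_all` are assumptions for illustration, so check them against `bentoctl build --help` before relying on them.

```python
import shlex
import subprocess


def deployment_commands(bento_tag, config_path="deployment_config.yaml",
                        tfvars_path="bentoctl.tfvars"):
    """Return the commands for one build-and-deploy pass, in order.

    The bentoctl flags and the default file names are assumptions,
    not something the article confirms.
    """
    return [
        # Package the Bento for the target cloud service (assumed flags).
        ["bentoctl", "build", "-b", bento_tag, "-f", config_path],
        # Standard Terraform workflow against the generated project files.
        ["terraform", "init"],
        ["terraform", "apply", f"-var-file={tfvars_path}", "-auto-approve"],
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print("$ " + shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical Bento tag; the dry run only prints the commands.
    run_all(deployment_commands("iris_classifier:latest"))
```

Keeping the commands as data makes the sequence easy to review in a pull request, and a pipeline can switch off `dry_run` once the team is comfortable with it.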

Additional configuration

If you ever need to make adjustments to deployment resources, you can easily update the Bentoctl configuration file and run `bentoctl generate`. Bentoctl will automatically make the necessary updates to a variables file that’s used as an input to the main Terraform deployment script. While Bentoctl has a number of variables you can configure out-of-the-box (like region, memory size, and timeout), DevOps engineers are also able to fully override and customize the Terraform deployment files. Any change to these configuration files can be committed in a PR so your team can collaborate on and review it. With the standard Terraform project structure, you are able to use any popular CI/CD tool to deploy to any Terraform provider.
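To make the variables-file idea concrete, here is a minimal sketch of how configuration values could be rendered into Terraform's `.tfvars` syntax. It is not Bentoctl's actual implementation: the function `render_tfvars` and the sample values are hypothetical, and only the variable names (region, memory size, timeout) come from the text above.

```python
import json


def render_tfvars(values):
    """Render a flat dict as Terraform variable assignments.

    Handles strings, booleans, and numbers only. This is a hypothetical
    helper for illustration, not Bentoctl's code.
    """
    lines = []
    for key, value in values.items():
        if isinstance(value, bool):
            # Terraform booleans are lowercase; check bool before int.
            rendered = "true" if value else "false"
        elif isinstance(value, (int, float)):
            rendered = str(value)
        else:
            # json.dumps gives a double-quoted, escaped string literal,
            # which is adequate for simple values like region names.
            rendered = json.dumps(str(value))
        lines.append(f"{key} = {rendered}")
    return "\n".join(lines) + "\n"


# Sample values are made up for illustration.
spec = {"region": "us-west-1", "memory_size": 512, "timeout": 10}
print(render_tfvars(spec), end="")
```

Because the rendered file is plain text with one assignment per line, changing a single value shows up as a one-line diff in a pull request.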

Try Bentoctl Out Today

We released Bentoctl to early beta testers, who found the tool both flexible enough to integrate with existing CI/CD pipelines and powerful enough to deploy into many different environments.

Today, we’re releasing our v1 as an open source project, and can’t wait to see what you build with it!

To learn more, visit Bentoctl’s GitHub page.

Got more questions about model serving? Join our growing Slack community of ML practitioners today.