Keras Models + BentoML + AWS EKS: A Simple Guide

Written By Bujar Bakiu. Originally posted on Medium.

BentoML is currently one of the hottest frameworks for serving, managing and deploying machine learning models. If you are reading this blog, I guess you have heard of it and have some interest in checking it out. So did I, and that’s why I did this small experiment.

One of the simplest exercises in ML is the classification of pets: is it a dog or a cat, based on an image provided. Personally, I had never done that exercise, but I was intrigued by it. Serving it with BentoML would make it even more challenging. The code is in my GitHub repository: https://github.com/bbakiu/bentoml-cat-dog-classifier

A few words on why I chose these technologies to serve the model:

- Keras: As I am not an expert in creating models and defining layers in a CNN (a nice way of saying I don’t know what is going on there), I found an interesting project by @Andrej Baranovskij and decided to transform it from a Jupyter Notebook into a pure Python application. Keras was used in that project, which is why I went with it too. AFAIK there are a lot of FastAI examples out there as well, and I plan to give it a shot in the near future.

- BentoML: As mentioned at the very beginning, it is one of the hottest trends in the ML world right now and I wanted to get some hands-on experience with it.

- AWS EKS: Over the last year I have been using and playing with AWS quite a bit, so it was a natural option to use AWS and specifically EKS (plus a bit of ECR).

This post describes how to serve a model in AWS EKS with BentoML.

Dataset#

The dataset used in this post is a Kaggle dataset available from the official Microsoft Download Center. Link: https://www.microsoft.com/en-us/download/details.aspx?id=54765.

Model#

The model is a Convolutional Neural Network (CNN, or ConvNet) built from several kinds of layers (Convolution, Pooling, Flatten, Dropout and Dense). For this post, the model was borrowed from another source. Its accuracy is reasonably good given the size of the dataset used for training. Training is done on 1,000 pictures each of dogs and cats, and validation and testing each use 500 pictures per category. The full dataset contains almost 12,500 pictures per category, so the training set could be enlarged. The time required for training would grow with it, though, so keeping the training set at 1,000 pictures per category seems reasonable.

Preparation Work#

While playing around with the dataset and the model in the beginning, two issues came up:

1. The dataset has some corrupted images.

2. The script for creating the test, train and validation datasets was not very flexible and would always generate the same datasets.

Fixing them manually proved to be not very efficient. An automated solution was worth the effort, as it makes the work reproducible and will hopefully help other people who run into similar issues.

A contributor on GitHub had already solved the image verification issue in a very reliable way. Unfortunately, finding that contributor again proved to be not so easy. Different versions of the same solution kept coming up in the search, so it seems safe to assume that by now it is public property. To everyone who contributed to that script: thank you!
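As a rough illustration of what an automated check for corrupted images can look like, here is a minimal standard-library sketch. It is not the contributor's script: it only tests whether a file starts with the JPEG start-of-image marker and ends with the end-of-image marker, which is a much weaker test than actually decoding the image. The function names are hypothetical, and the sketch assumes the images are `.jpg` files in a single folder.

```python
from pathlib import Path
from typing import List

JPEG_START = b"\xff\xd8"  # SOI marker that opens a JPEG file
JPEG_END = b"\xff\xd9"    # EOI marker that normally closes it


def looks_like_jpeg(path: Path) -> bool:
    """Heuristic: the file is framed by the JPEG SOI and EOI markers."""
    data = path.read_bytes()
    return (
        len(data) >= 4
        and data.startswith(JPEG_START)
        and data.endswith(JPEG_END)
    )


def find_corrupted(folder: Path) -> List[Path]:
    """Return the .jpg files in `folder` that fail the heuristic check."""
    return sorted(p for p in folder.glob("*.jpg") if not looks_like_jpeg(p))
```

A file that passes can still fail to decode, and a valid file with trailing bytes after the end marker would be flagged, so a decoding-based check is the safer choice when an imaging library is available.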

As for the second issue: the script that distributed the images into the different datasets relied on the index of each image (which is also used as the file name), so it broke once the cleanup had removed some files. To improve on that, I refactored the script to generate the datasets from the file names only. This way, every newly generated dataset differs from the previous one, and the model might produce better or, in the unlucky case, worse predictions.
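The idea of splitting by file name instead of by index can be sketched as follows. This is a minimal sketch and not the script from the repository: the function names, the `train`/`validation`/`test` folder layout and the `seed` parameter are assumptions made for illustration.

```python
import random
import shutil
from pathlib import Path
from typing import Dict, List, Optional


def split_file_names(names: List[str], sizes: Dict[str, int],
                     seed: Optional[int] = None) -> Dict[str, List[str]]:
    """Shuffle file names and cut them into non-overlapping subsets."""
    needed = sum(sizes.values())
    if needed > len(names):
        raise ValueError(f"need {needed} files, found {len(names)}")
    shuffled = sorted(names)               # start from a stable order
    random.Random(seed).shuffle(shuffled)  # seed=None: new split on every run
    subsets: Dict[str, List[str]] = {}
    start = 0
    for subset, size in sizes.items():
        subsets[subset] = shuffled[start:start + size]
        start += size
    return subsets


def build_datasets(source: Path, target: Path, category: str,
                   sizes: Dict[str, int], seed: Optional[int] = None) -> None:
    """Copy one category's images into target/<subset>/<category>/."""
    names = [p.name for p in (source / category).iterdir() if p.is_file()]
    for subset, chosen in split_file_names(names, sizes, seed).items():
        out_dir = target / subset / category
        out_dir.mkdir(parents=True, exist_ok=True)
        for name in chosen:
            shutil.copy2(source / category / name, out_dir / name)
```

With the sizes used in this post, `sizes` would be `{"train": 1000, "validation": 500, "test": 500}` per category. Because the split works on whatever file names are present, gaps left by removed images no longer matter.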

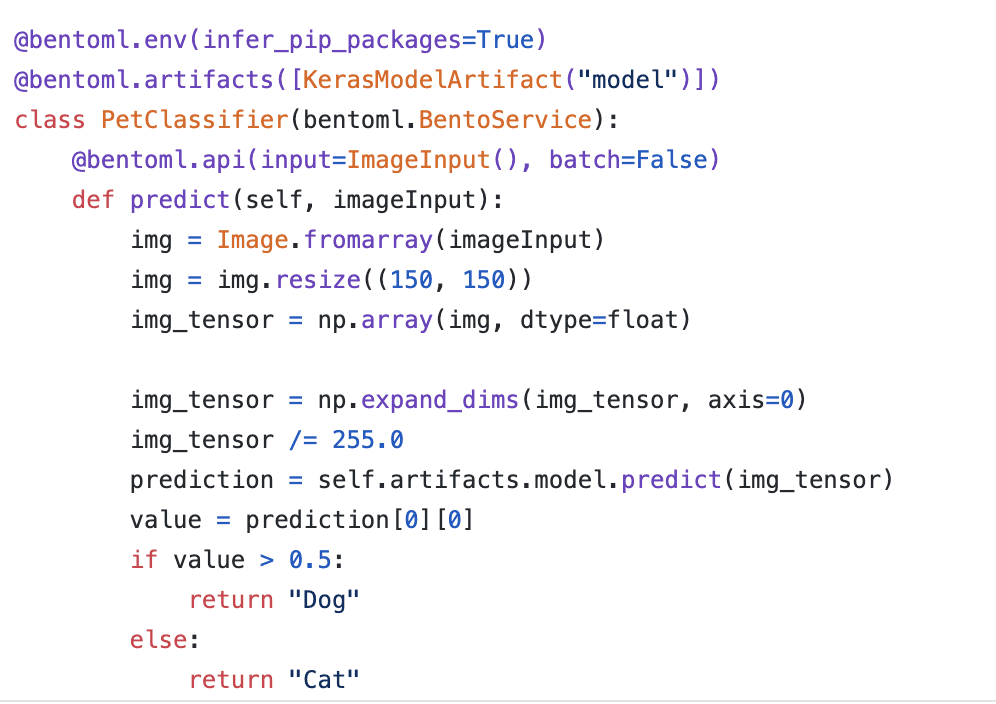

BentoML Service#

The BentoML service in this case looks something like this:

https://github.com/bbakiu/bentoml-cat-dog-classifier/blob/main/pet_classifier_bentoml_service.py

It takes an image as input in the request. The image is then transformed into a format the model accepts and can make a prediction on. After the prediction is made, the service returns whether it is a cat or a dog, and hopefully (at least most of the time) it is right.

Serving#

Here are the steps needed to serve the model in AWS EKS, with the container image stored in ECR:

Upload The Image To ECR#

BentoML has very good documentation on how to containerize the service and make it available in ECR. The AWS Management Console also has very nice documentation and provides a simple set of commands that help in pushing the image to ECR.
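For orientation, the push usually comes down to three commands: log Docker in to the registry, tag the local image with the ECR URI, and push it. The helper below is a small sketch that only assembles those command strings. The function names are hypothetical, and the account ID, region, repository and image names in the usage note are placeholders rather than values from this project.

```python
from typing import List


def ecr_registry(account_id: str, region: str) -> str:
    """Host name of the private ECR registry for an account and region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str,
                  repository: str, tag: str = "latest") -> str:
    """Full image URI that Docker needs in order to push to ECR."""
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"


def ecr_push_commands(local_image: str, account_id: str, region: str,
                      repository: str, tag: str = "latest") -> List[str]:
    """Shell commands to log in, tag the local image and push it."""
    registry = ecr_registry(account_id, region)
    remote = ecr_image_uri(account_id, region, repository, tag)
    return [
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        f"docker tag {local_image} {remote}",
        f"docker push {remote}",
    ]
```

Calling `ecr_push_commands("pet-classifier:latest", "123456789012", "eu-central-1", "pet-classifier")` returns the three commands as strings, ready to paste into a shell once the ECR repository exists.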

Create Cluster With EKS CLI#

The easiest way to create the cluster in EKS is via eksctl. Something like this would do well, with your own values for the cluster name and the number of nodes:

    eksctl create cluster \
      --name <cluster-name> \
      --version <1.18> \
      --nodegroup-name standard-workers \
      --node-type t3.micro \
      --nodes <number-of-nodes> \
      --nodes-min 1 \
      --nodes-max 4 \
      --node-ami auto

Create Service And Deployment In Kubernetes#

To start running the service in EKS, a Kubernetes load balancer service and a deployment are required. You can check here or here for samples on how to do it.

Pro Tip: Kubernetes is awesome and easy to start learning, so play around with it.
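As a rough idea of what those two objects contain, the sketch below builds a Deployment and a LoadBalancer Service as plain dictionaries and renders them as JSON, which `kubectl apply -f` accepts alongside YAML. The names, the image URI and the container port 5000 are assumptions for illustration; use whatever port your BentoML container actually listens on.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, port: int,
                        replicas: int = 1) -> Dict[str, Any]:
    """Deployment that runs the containerized service."""
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [container]},
            },
        },
    }


def load_balancer_manifest(name: str, port: int,
                           target_port: int) -> Dict[str, Any]:
    """Service of type LoadBalancer that exposes the pods publicly."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},  # must match the pod labels above
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def render_manifests(name: str, image: str, container_port: int = 5000,
                     public_port: int = 80) -> str:
    """Bundle both objects in a v1 List so one file holds everything."""
    bundle = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            deployment_manifest(name, image, container_port),
            load_balancer_manifest(name, public_port, container_port),
        ],
    }
    return json.dumps(bundle, indent=2)
```

Writing the output of `render_manifests("pet-classifier", "<your ECR image URI>")` to a file and applying it with `kubectl apply -f` creates both objects in one go.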

And That’s It. Deploy And Enjoy.#

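Check the endpoint of the service with kubectl get endpoints to find the URL under which the service can be accessed. For a load balancer service, kubectl get service also lists the external address in its EXTERNAL-IP column. The AWS Management Console can be helpful here too.

Here is my GitHub repo: https://github.com/bbakiu/bentoml-cat-dog-classifier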
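Once the URL is known, a quick way to try the service is to post an image to it. The sketch below uses only the standard library and makes two assumptions that may not match the deployed service: that the API endpoint is called `predict`, and that it accepts the raw image bytes with an `image/jpeg` content type. The function names are hypothetical.

```python
import urllib.request


def build_predict_request(base_url: str, image_bytes: bytes,
                          endpoint: str = "predict") -> urllib.request.Request:
    """Prepare a POST request carrying the raw image bytes."""
    url = f"{base_url.rstrip('/')}/{endpoint}"
    return urllib.request.Request(
        url,
        data=image_bytes,
        method="POST",
        headers={"Content-Type": "image/jpeg"},
    )


def classify(base_url: str, image_path: str, timeout: float = 30.0) -> str:
    """Send one image file to the service and return the response body."""
    with open(image_path, "rb") as image_file:
        request = build_predict_request(base_url, image_file.read())
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, `classify("http://<load balancer address>", "cat.jpg")` would return whatever the service answers for that picture.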

Happy Coding ;)