The Best Open-Source LLMs in 2026

The rapid rise of large language models (LLMs) has transformed how we build modern AI applications. They now power everything from customer support chatbots to complex LLM agents that can reason, plan, and take actions across tools.

For many AI teams, closed-source options like GPT-5 and Claude Sonnet 4 are convenient. With just a simple API call, you can prototype an AI product in minutes — no GPUs to manage and no infrastructure to maintain. However, this convenience comes with trade-offs: vendor lock-in, limited customization, unpredictable pricing and performance, and ongoing concerns about data privacy.

That’s why open-source LLMs have become so important. They let developers self-host models privately, fine-tune them with domain-specific data, and optimize inference performance for their unique workloads.

In this post, we’ll explore the best open-source LLMs. After that, we’ll answer some of the FAQs teams have when evaluating LLMs for production use.

What are open-source LLMs?#

Generally speaking, open-source LLMs are models whose architecture, code, and weights are publicly released so anyone can download them, run them locally, fine-tune them, and deploy them in their own infrastructure. They give teams full control over inference, customization, data privacy, and long-term costs.

However, the term “open-source LLM” is often used loosely. Many models are openly available, but their licensing falls under open weights, not traditional open source.

Open weights here means the model parameters are published and free to download, but the license may not meet the Open Source Initiative (OSI) definition of open source. These models sometimes have restrictions, such as commercial-use limits, attribution requirements, or conditions on how they can be redistributed.

The OSI highlights the key differences:

| Feature | Open Weights | Open Source |
|---|---|---|
| Weights & Biases | Released | Released |
| Training code | Not shared | Fully shared |
| Intermediate checkpoints | Withheld | Nice to have |
| Training dataset | Not shared or disclosed | Released (when legally allowed) |
| Training data composition | Partially disclosed or not disclosed | Fully disclosed |

Both categories allow developers to self-host models, inspect their behaviors, and fine-tune them. The main differences lie in licensing freedoms and how much of the model’s training pipeline is disclosed.

We won’t dive too deeply into the licensing taxonomy in this post. For the purposes of this guide, every model listed can be freely downloaded and self-hosted, which is what most teams care about when evaluating open-source LLMs for production use.

DeepSeek-V3.2#

DeepSeek came into the spotlight during the “DeepSeek moment” in early 2025, when its R1 model demonstrated ChatGPT-level reasoning at significantly lower training costs. The latest release, DeepSeek-V3.2, builds on the V3 and R1 series and is now one of the best open-source LLMs for reasoning and agentic workloads. It focuses on combining frontier reasoning quality with improved efficiency for long-context and tool-use scenarios.

At the core of DeepSeek-V3.2 are three main ideas:

- DeepSeek Sparse Attention (DSA). A sparse attention mechanism that significantly reduces compute for long-context inputs while preserving model quality.

- Scaled reinforcement learning. A high-compute RL pipeline that pushes reasoning performance into GPT-5 territory. The DeepSeek-V3.2-Speciale variant surpasses GPT-5 and reaches Gemini-3.0-Pro-level reasoning on benchmarks such as AIME and HMMT 2025.

- Large-scale agentic task synthesis. A data pipeline that blends reasoning with tool use. DeepSeek built 1,800+ distinct environments and 85,000+ agent tasks across search, coding, and multi-step tool-use to drive the RL process.

Why should you use DeepSeek-V3.2:

- Frontier-level reasoning with better efficiency. Designed to balance strong reasoning with shorter, more efficient outputs, DeepSeek-V3.2 delivers top-tier performance on reasoning tasks while keeping inference costs in check. It works well for everyday tasks too, including chat, Q&A, and general agent workflows.

- Built for agents and tool use. DeepSeek-V3.2 is the first in the series to integrate thinking directly into tool-use. It supports tool calls in both thinking and non-thinking modes.

- Specialized deep-reasoning variant. DeepSeek-V3.2-Speciale is a high-compute variant tuned specifically for complex reasoning tasks like Olympiad-style math. It is ideal when raw reasoning performance matters more than latency or tool use, though it does not support tool calling currently. Note that it requires more token usage and cost relative to DeepSeek-V3.2.

- Fully open-source. Released under the permissive MIT License, DeepSeek-V3.2 is free to use for commercial, academic, and personal projects. It's an attractive option for teams building self-hosted LLM deployments, especially those looking to avoid vendor lock-in.

If you’re building LLM agents or reasoning-heavy applications, DeepSeek-V3.2 is one of the first models you should evaluate. For deployment, you can pair it with high-performance runtimes like vLLM to get efficient serving out of the box.

Also note that DeepSeek-V3.2 requires substantial compute resources. Running it efficiently requires multi-GPU setups, like 8 NVIDIA H200 (141GB of memory) GPUs.

Learn more about other DeepSeek models like V3.1 and R1 and their differences.

MiMo-V2-Flash#

MiMo-V2-Flash is an ultra-fast open-source LLM from Xiaomi built for reasoning, coding, and agentic workflows. It’s a MoE model with 309B total parameters but only 15B active per token, giving it a strong balance of capability and serving efficiency. The model supports an ultra-long 256K context window and a hybrid “thinking” mode, so you can enable deeper reasoning only when needed.

A key reason behind MiMo-V2-Flash’s price-performance profile is the hybrid attention design. In a normal transformer, each new token can look at every previous token (global attention). That’s great for quality, but for long contexts it costs a lot of compute and it forces the model to keep a lot of KV cache.

MiMo takes a different approach. Most layers only attend to the latest 128 tokens using sliding-window attention, and only 1 out of every 6 layers performs full global attention (a 5:1 local-to-global ratio). This avoids paying the full long-context cost at every layer and delivers nearly a 6× reduction in KV-cache storage and attention computation for long prompts.
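The "nearly 6×" figure can be checked with a little arithmetic. The sketch below counts how many token positions each layer has to keep in its KV cache under full attention versus the 5:1 hybrid layout described above. The layer count of 48 is a hypothetical value chosen for illustration, and the sketch assumes every layer uses the same number of KV heads and the same head dimension, so the ratio of cached positions equals the ratio of KV-cache bytes.

```python
def cached_positions(seq_len: int, num_layers: int,
                     window: int = 128, global_every: int = 6) -> tuple:
    """Return (full_total, hybrid_total) cached token positions summed over layers.

    full_total:   every layer uses global attention and caches all seq_len tokens.
    hybrid_total: 1 in `global_every` layers is global; the rest use a sliding
                  window and only keep the latest `window` tokens.
    """
    num_global = num_layers // global_every
    num_local = num_layers - num_global
    full_total = num_layers * seq_len
    hybrid_total = num_global * seq_len + num_local * min(seq_len, window)
    return full_total, hybrid_total


def kv_reduction(seq_len: int, num_layers: int = 48) -> float:
    """Ratio of full-attention KV cache to hybrid KV cache (higher is better).

    num_layers=48 is a hypothetical value, not MiMo-V2-Flash's published config.
    """
    full_total, hybrid_total = cached_positions(seq_len, num_layers)
    return full_total / hybrid_total


if __name__ == "__main__":
    for seq_len in (128, 4_096, 262_144):
        print(f"{seq_len:>7} tokens: {kv_reduction(seq_len):.2f}x smaller KV cache")
```

The saving only shows up on long prompts: for inputs no longer than the window, both layouts cache the same number of positions, and the ratio approaches 6 as the sequence grows.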

Why should you use MiMo-V2-Flash:

- Top-tier coding agent performance. MiMo-V2-Flash outperforms open-source LLMs like DeepSeek-V3.2 and Kimi-K2 on software-engineering benchmarks, with roughly one-half to one-third of their total parameters. The results are even competitive with leading closed-source models like GPT-5.

- Serious inference efficiency. Xiaomi positions MiMo-V2-Flash for high-throughput serving, citing around 150 tokens/sec and very aggressive pricing ($0.10 per million input tokens and $0.30 per million output tokens).

- Built for agents and tool use. The model is trained explicitly for agentic and tool-calling workflows, spanning code debugging, terminal operations, web development, and general tool use.

A major part of this comes from their post-training strategy, Multi-Teacher Online Policy Distillation (MOPD). Instead of relying only on static fine-tuning data, MiMo learns from multiple domain-specific teacher models through dense, token-level rewards on its own rollouts. This allows the model to efficiently acquire strong reasoning and agentic behavior. For details, check out their technical report.

Kimi-K2#

Kimi-K2 is a MoE model optimized for agentic tasks, with 32 billion activated parameters and a total of 1 trillion parameters. It delivers state-of-the-art performance in frontier knowledge, math, and coding among non-thinking models. Three variants are open-sourced:

- Kimi-K2-Base for full-control fine-tuning

- Kimi-K2-Instruct-0905 (drop-in chat/agent use) improves agentic and front-end coding abilities and extends context length to 256K tokens

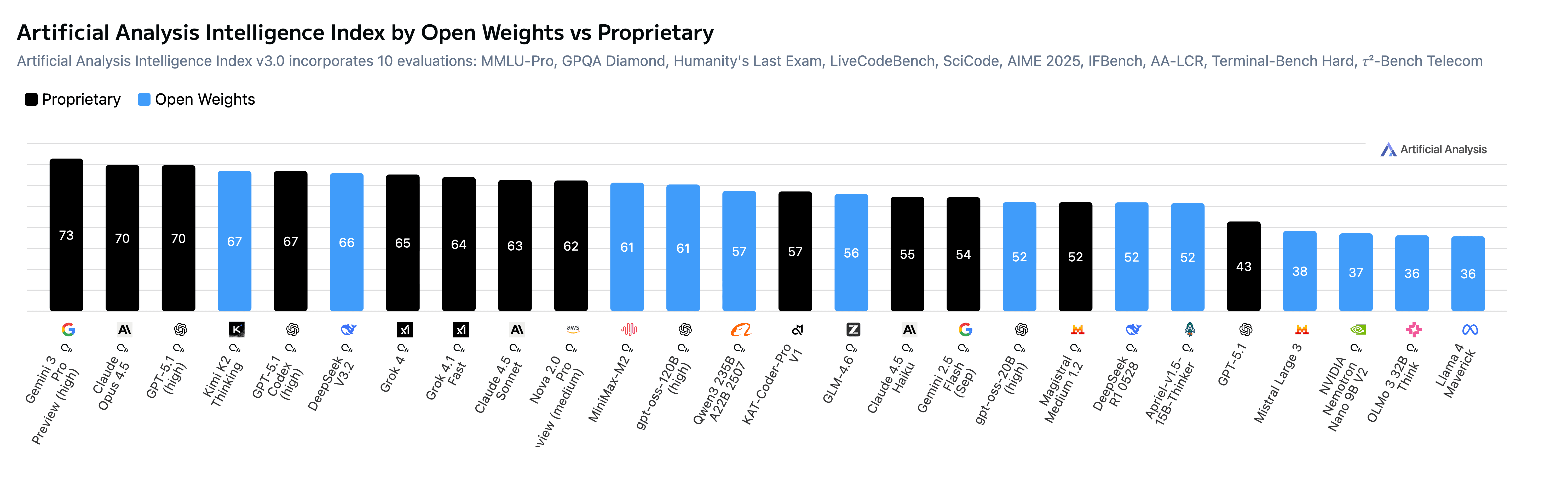

- Kimi-K2-Thinking is a reasoning variant of Kimi-K2-Instruct, with native INT4 quantization. It scores 67 on the Artificial Analysis Intelligence Index, higher than any other open-weight model.

Why should you use Kimi-K2:

-

Agent-first design. The agentic strength comes from two pillars: large-scale agentic data synthesis and general RL. Inspired by ACEBench, K2’s pipeline simulates realistic multi-turn tool-use across hundreds of domains and thousands of tools, including real MCP tools + synthetic ones.

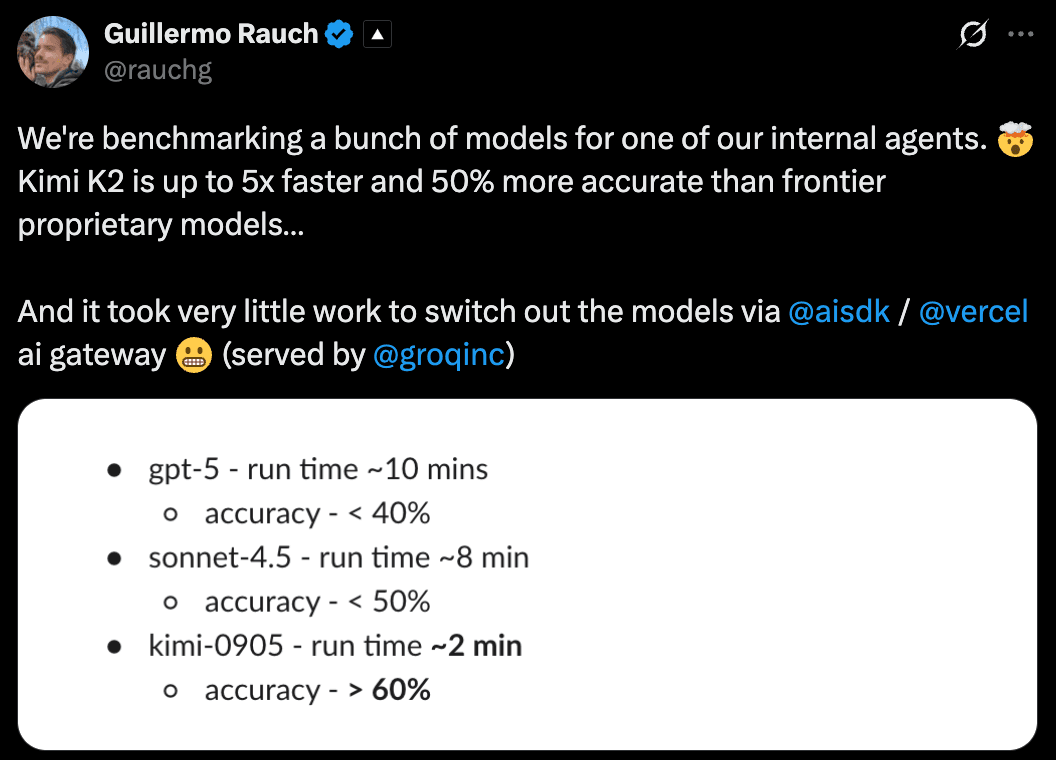

Some teams already saw great real-world results. For example, Guillermo Rauch, CEO of Vercel, mentioned that Kimi K2 ran up to 5× faster and was about 50% more accurate than some top proprietary models like GPT-5 and Claude-Sonnet-4.5 in their internal agent tests.

Image Source: Rauch's Original Post -

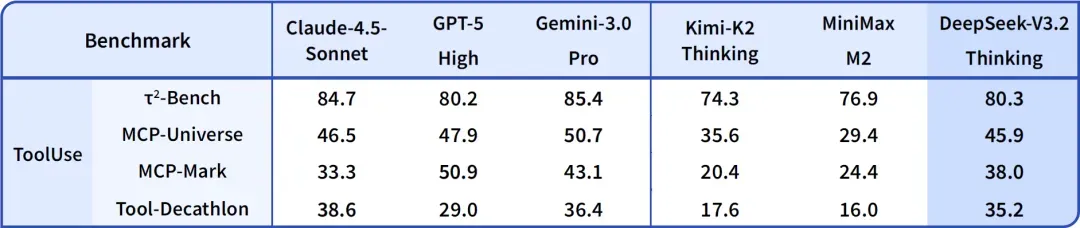

Competitive coding & tool use. In head-to-head evaluations, Kimi-K2-Instruct matches or outperforms open-source and proprietary models (e.g., DeepSeek-V3-0324, Qwen3-235B-A22B, Claude Sonnet 4, Gemini 2.5 Flash) on knowledge-intensive reasoning, code generation, and agentic tool-use tasks.

-

Long-context. Updated weights support 256K tokens, useful for agent traces, docs, and multi-step planning.

Note that Kimi-K2 is released under a modified MIT license. The sole modification: If you use it in a commercial product or service with 100M+ monthly active users or USD 20M+ monthly revenue, you must prominently display “Kimi K2” in the product’s user interface.

GLM-4.7#

GLM-4.5 was built with the goal of creating a truly generalist LLM. The team believes a strong LLM must go beyond just a single domain. It should combine problem solving, generalization, common-sense reasoning, and more capabilities into a single model.

To measure this, they focus on three pillars:

- Agentic abilities: interacting with external tools and the real world.

- Complex reasoning: solving multi-step problems in domains like mathematics and science.

- Advanced coding: tackling real-world software engineering tasks.

The result is the GLM-4.5 series, designed to unify reasoning, coding, and agentic abilities in one model.

GLM-4.6 built on this foundation with more balanced performance across benchmarks and real-world use cases. GLM-4.7 continues this trajectory, but represents a more important step forward in the areas that matter most for production agentic workflows: coding agents, terminal-based tasks, tool use, and stability over long multi-turn interactions.

Why should you use GLM-4.7:

- Stronger coding agents and terminal workflows. GLM-4.7 shows clear gains on agentic coding benchmarks, matching or surpassing models like DeepSeek-V3.2, Claude Sonnet 4.5, and GPT-5.1. It’s explicitly tuned for modern coding-agent tools such as Claude Code, Cline, Roo Code, and Kilo Code.

- Better tool use and web-style browsing tasks. It improves reliability on tool-heavy evaluations and browsing-style tasks, which is exactly where many agent systems fail in production.

- Higher quality UI generation. GLM-4.7 focuses explicitly on cleaner, more modern webpages and better layout fidelity for slides.

- More controllable multi-turn reasoning. GLM-4.7 builds on interleaved thinking and introduces features aimed at long-horizon stability:

  - Interleaved Thinking: thinks before responses and tool calls for better instruction following.

  - Preserved Thinking: retains prior thinking across turns in coding-agent scenarios to reduce drift and inconsistencies.

  - Turn-level Thinking: lets you enable reasoning only when needed to manage latency and cost.

Check out the details of this feature in their documentation.

If your application involves reasoning, coding, and agentic tasks together, GLM-4.7 is a strong candidate. For teams with limited resources, GLM-4.5-Air FP8 is a more practical choice, which fits on a single H200.

I also recommend GLM-4.7-Flash. It’s a lightweight 30B MoE model with strong agentic performance and better serving efficiency (e.g., for local coding and agentic tasks).

gpt-oss-120b#

gpt‑oss‑120b is OpenAI’s most capable open-source LLM to date. With 117B total parameters and a Mixture-of-Experts (MoE) architecture, it rivals proprietary models like o4‑mini. More importantly, it’s fully open-weight and available for commercial use.

OpenAI trained the model with a mix of reinforcement learning and lessons learned from its frontier models, including o3. The focus was on making it strong at reasoning, efficient to run, and practical for real-world use. The training data was mostly English text, with a heavy emphasis on STEM, coding, and general knowledge. For tokenization, OpenAI used an expanded version of the tokenizer that also powers o4-mini and GPT-4o.

The release of gpt‑oss marks OpenAI’s first fully open-weight LLMs since GPT‑2. It has already seen adoption from early partners like Snowflake, Orange, and AI Sweden for fine-tuning and secure on-premises deployment.

Why should you use gpt‑oss‑120b:

- Excellent performance. gpt‑oss‑120b matches or surpasses o4-mini on core benchmarks like AIME, MMLU, TauBench, and HealthBench, and even outperforms proprietary models like OpenAI o1 and GPT‑4o.

- Efficient and flexible deployment. Despite its size, gpt‑oss‑120b can run on a single 80GB GPU (e.g., NVIDIA H100 or AMD MI300X). It's optimized for local, on-device, or cloud inference via partners like vLLM, llama.cpp, and Ollama.

- Adjustable reasoning levels. It supports low, medium, and high reasoning modes to balance speed and depth.

  - Low: Quick responses for general use.

  - Medium: Balanced performance and latency.

  - High: Deep and detailed analysis.

- Permissive license. gpt‑oss‑120b is released under the Apache 2.0 license, which means you can freely use it for commercial applications. This makes it a good choice for teams building custom LLM inference pipelines.
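To make the reasoning levels concrete, here is a minimal sketch of how a request might select one. It assumes the convention described in the gpt-oss model card, where the level is stated in the system prompt as a line such as `Reasoning: high`. The helper name `build_messages` is hypothetical, and your serving stack may expose the setting differently, so check its documentation before relying on this.

```python
VALID_LEVELS = ("low", "medium", "high")


def build_messages(user_prompt: str, reasoning: str = "medium") -> list:
    """Build a chat message list that requests a given reasoning level.

    Assumption: the model reads the level from a "Reasoning: <level>" line
    in the system prompt. Verify this against your model card and runtime.
    """
    if reasoning not in VALID_LEVELS:
        raise ValueError(f"reasoning must be one of {VALID_LEVELS}, got {reasoning!r}")
    return [
        {"role": "system", "content": f"Reasoning: {reasoning}"},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Use "low" for quick answers and "high" when depth matters more than latency.
    for message in build_messages("Prove that sqrt(2) is irrational.", "high"):
        print(message)
```

The resulting list has the usual chat-completions shape, so it can be sent to any OpenAI-compatible endpoint that serves the model.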

Deploy gpt-oss-120b with vLLM

Qwen3-235B-A22B-Instruct-2507#

Alibaba has been one of the most active contributors to the open-source LLM ecosystem with its Qwen series. Qwen3 is the latest generation, offering both dense and MoE models across a wide range of sizes. At the top of the lineup is Qwen3-235B-A22B-Instruct-2507, an updated version of the earlier Qwen3-235B-A22B’s non-thinking mode.

This model has 235B parameters, with 22B active per token, powered by 128 experts (8 active). Note that it only supports non-thinking mode and does not generate <think></think> blocks. You can try Qwen3-235B-A22B-Thinking-2507 for more complex reasoning tasks.

Why should you use Qwen3-235B-A22B-Instruct-2507:

- State-of-the-art performance. The model sees significant gains in instruction following, reasoning, comprehension, math, science, coding, and tool use. It outperforms models like GPT-4o and DeepSeek-V3 on benchmarks including GPQA, AIME25, and LiveCodeBench.

- Ultra-long context. It features a context length of 262,144 natively and extendable up to over 1 million tokens. This makes it a perfect choice for systems like AI agents, RAG, and long-term conversations. Keep in mind that to process sequences at this scale, you need around 1000 GB of GPU memory (model weights, KV-cache storage, and peak activation memory demands).

- Multilingual strength. Instruct-2507 supports 100+ languages and dialects, with better coverage of long-tail knowledge and stronger multilingual instruction-following than previous Qwen models.

The Qwen team did not stop with Instruct-2507. They note a clear trend toward scaling both parameter count and context length to build more powerful and agentic AI. Their answer is the Qwen3-Next series, which focuses on improved scaling efficiency and architectural innovations.

The first release, Qwen3-Next-80B-A3B, comes in both Instruct and Thinking versions. The instruct variant performs on par with Qwen3-235B-A22B-Instruct-2507 on several benchmarks, while showing clear advantages in ultra-long-context tasks up to 256K tokens.

Since Qwen3-Next is still very new, there’s much more to explore. We’ll be sharing more updates later.

Deploy Qwen3-235B-A22B-Instruct-2507

MiniMax-M2.1#

MiniMax-M2.1 is an agent-focused LLM that brings top-tier autonomous capabilities into production. With 230B parameters (10B active), 60 tokens/sec output speed, and a 204,800-token context window, MiniMax-M2.1 represents a reliable choice for agentic coding and automation tasks.

Beyond the model itself, MiniMax also introduced VIBE (Visual & Interactive Benchmark for Execution in Application Development), a benchmark that evaluates a model’s ability to build complete, functional applications “from zero to one.” VIBE covers five core areas (Web, Simulation, Android, iOS, and Backend) and MiniMax-M2.1 delivers strong results overall, with particularly high scores on VIBE-Web (91.5) and VIBE-Android (89.7).

Why should you use MiniMax-M2.1:

- Exceptional multi-language programming. MiniMax-M2.1 is tuned beyond Python, with systematic upgrades across languages like Rust, Java, Go, C++, Kotlin, Objective-C, TypeScript, and JavaScript. This means you can use it for real-world codebases that span multiple stacks. It also shows stable performance across popular coding-agent frameworks such as Claude Code, Cline, and Kilo Code.

- Web + mobile app development with better vibe coding. It improves both execution and aesthetics for Web/AppDev, including stronger native Android and iOS capability and better design comprehension for interactive apps.

- More reliable agent behavior. MiniMax-M2.1 strengthens interleaved thinking and focuses on executing composite instruction constraints. Simply put, it can follow multiple simultaneous rules such as system prompts, tool schemas, memory files, and specifications (e.g., Agents.md and Claude.md). This is exactly where many agent systems break down in real office and enterprise workflows.

Note that MiniMax-M2.1 is released under a modified MIT license. The only restriction is that if you use the model (or derivative works) in your commercial product, you must explicitly display the name “MiniMax M2.1” in the user interface.

Ling-1T#

Developed by InclusionAI, Ling-1T is a trillion-parameter non-thinking model built on the Ling 2.0 architecture. It represents the frontier of efficient reasoning, featuring an evolutionary chain-of-thought (Evo-CoT) process across mid-training and post-training stages.

With 1 trillion total parameters and ≈ 50 billion active per token, Ling-1T uses a MoE design optimized through the Ling Scaling Law for trillion-scale stability. The model was trained on more than 20 trillion high-quality, reasoning-dense tokens, supporting up to 128K context length.

Why should you use Ling-1T:

- Efficient reasoning. Ling-1T expands the Pareto frontier between reasoning accuracy and length on tasks like AIME 25. It demonstrates advanced reasoning compression, maintaining high accuracy with fewer generated tokens. Across major math, reasoning and code benchmarks, it outperforms or matches top models like DeepSeek-V3.1-Terminus, GPT-5-main, and Gemini-2.5-Pro.

- Emergent intelligence at trillion-scale. The model exhibits strong emergent reasoning and transfer capabilities. Without extensive trajectory fine-tuning, Ling-1T achieves around 70% tool-call accuracy (BFCL V3). It can interpret complex natural-language instructions and transform abstract logic into functional visual components. However, the current release still has room for improvement in multi-turn interaction, long-term memory, and tool use.

- Aesthetic and front-end generation strength. With its hybrid Syntax–Function–Aesthetics reward mechanism, Ling-1T produces not only functional code but also visually refined front-end layouts. It currently ranks first among open-source models on ArtifactsBench. This is especially useful for building applications that combine reasoning and UI generation.

Llama 4 Scout and Maverick#

Meta’s Llama series has long been a popular choice among AI developers. With Llama 4, the team introduces a new generation of natively multimodal models that handle both text and images.

The Llama 4 models all use the MoE architecture:

- Llama 4 Scout: 109B total parameters (17B active), 16 experts.

- Llama 4 Maverick: 400B total parameters (17B active), 128 experts.

- Llama 4 Behemoth (preview): 2T total parameters (288B active), 16 experts. Still in training, Behemoth already outperforms GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on several STEM benchmarks.

Why should you use Llama 4 Scout and Maverick:

- Strong performance. Because of distillation from Llama 4 Behemoth, both Scout and Maverick are the best multimodal models in their classes. Maverick outperforms GPT-4o and Gemini 2.0 Flash on many benchmarks (like image understanding and coding), and is close to DeepSeek-V3.1 in reasoning and coding, with less than half the active parameters.

- Efficient deployment.

  - Scout can fit on a single H100 GPU with on-the-fly INT4 quantization (released in BF16).

  - Maverick is officially available in BF16 and FP8 quantized weights. The FP8 version fits on a single H100 DGX host while maintaining strong quality.

- Safety and reliability. Llama 4 Scout and Maverick were released with built-in safeguards to help developers integrate them responsibly. This includes alignment tuning during post-training, safety evaluations against jailbreak and prompt injection attacks, and support for open-source guard models such as Llama Guard and Prompt Guard.
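The deployment claims above follow from simple weight-size arithmetic. The sketch below estimates memory for the weights alone as total parameters times bits per parameter, then compares it with the GPU memory available. It assumes an 80GB H100 and an 8-GPU DGX host, and it deliberately ignores the KV cache, activations, and runtime overhead, so treat "fits" as a necessary condition rather than a guarantee.

```python
def weight_memory_gb(total_params_billion: float, bits_per_param: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives GB directly.
    """
    return total_params_billion * bits_per_param / 8


def fits(total_params_billion: float, bits_per_param: float,
         num_gpus: int, gpu_memory_gb: float = 80.0) -> bool:
    """True if the weights alone fit in the combined GPU memory.

    Assumption: 80 GB per GPU (H100). KV cache and activations are ignored.
    """
    return weight_memory_gb(total_params_billion, bits_per_param) <= num_gpus * gpu_memory_gb


if __name__ == "__main__":
    # (name, total params in billions, bits per param, number of GPUs)
    cases = [
        ("Llama 4 Scout, BF16, 1x H100", 109, 16, 1),
        ("Llama 4 Scout, INT4, 1x H100", 109, 4, 1),
        ("Llama 4 Maverick, FP8, 8x H100", 400, 8, 8),
    ]
    for name, params, bits, gpus in cases:
        size = weight_memory_gb(params, bits)
        print(f"{name}: {size:.1f} GB of weights, fits={fits(params, bits, gpus)}")
```

This is why Scout needs INT4 quantization to run on one H100: its BF16 weights alone are larger than a single 80GB card.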

The specific decision between Llama 4 Scout and Maverick depends on your use case:

- If you need to handle long-context scenarios (up to 10M tokens) or your compute is limited, choose Llama 4 Scout.

- If you need best-in-class multimodal performance for reasoning, coding, and visual tasks, choose Llama 4 Maverick.

Also note that it’s been almost 8 months since the Llama 4 models were released as of this writing. Newer open-source and proprietary LLMs have already surpassed them in certain areas.

Deploy Llama-4-Scout-17B-16E-Instruct

Now let’s take a quick look at some of the FAQs around LLMs.

What is the best open-source LLM now?#

If you’re looking for a single name, the truth is: there isn’t one. The “best” open-source LLM always depends on your use case, compute budget, and priorities.

That said, if you really want some names, here are commonly recommended open-source LLMs for different use cases.

- Reasoning: DeepSeek-V3.2-Speciale

- Coding assistants: GLM-4.7, MiniMax-M2.1

- Agentic workflows: MiMo-V2-Flash, Kimi-K2

- General chat: Qwen3-235B-A22B-Instruct-2507, DeepSeek-V3.2

- Story writing & creative tasks: Qwen3-235B-A22B-Instruct-2507, Llama 4 Maverick

Treat these suggestions as starting points, not canonical answers. The “best” model is the one that fits your product requirements, works within your compute constraints, and can be optimized for your specific tasks.

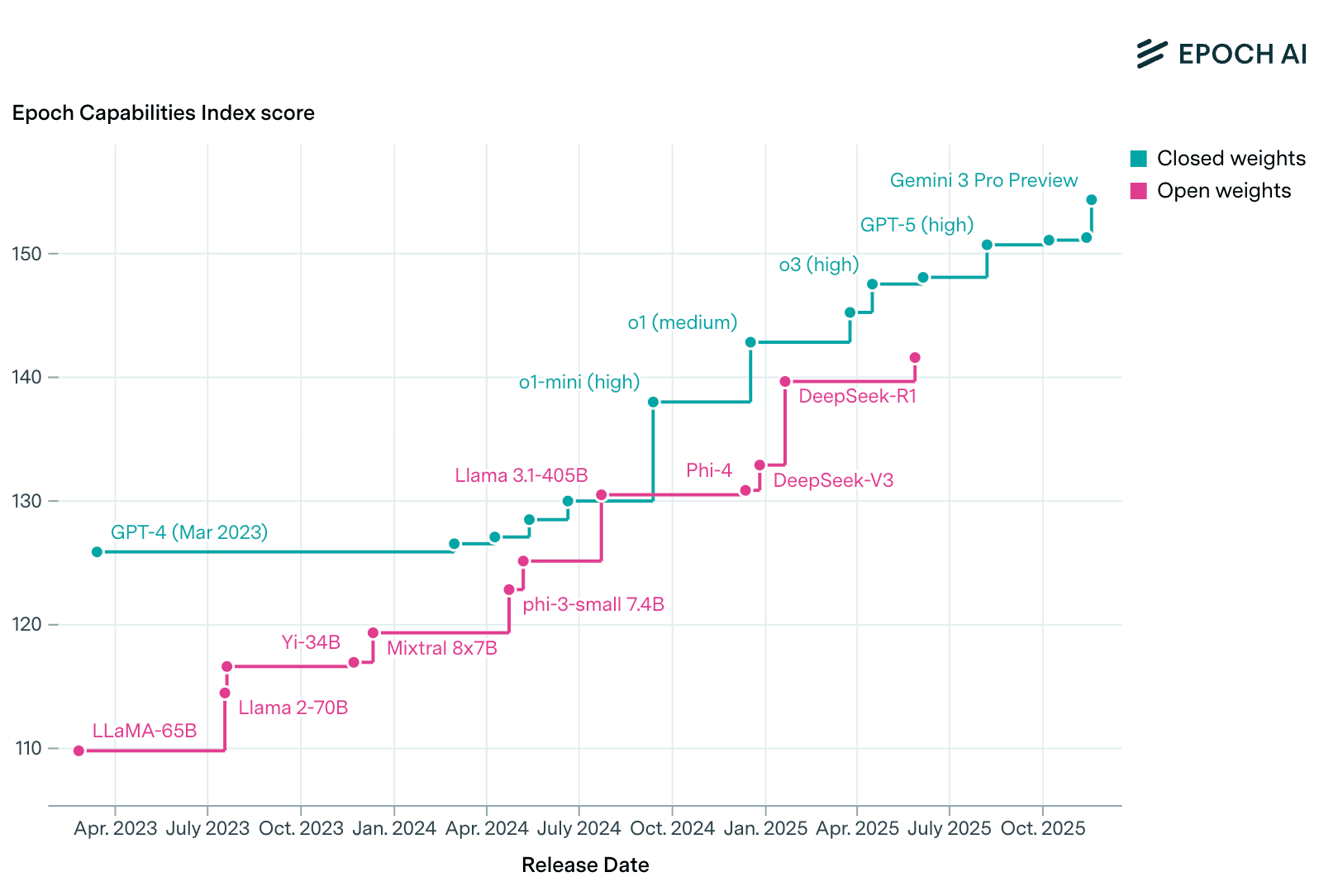

The open-source LLM space is evolving quickly. New releases often outperform older models within months. In other words, what feels like the best today might be outdated tomorrow.

If you are looking for models that can run in resource-constrained environments, take a look at the top small language models (SLMs).

Instead of chasing the latest winner, it’s better to focus on using a flexible inference platform that makes it easy to switch between frontier open-source models. This way, when a stronger model is released, you can adopt it quickly as needed and apply the inference optimization techniques you need for your workload.

Why should I choose open-source LLMs over proprietary LLMs?#

The decision between open-source and proprietary LLMs depends on your goals, budget, and deployment needs. Open-source LLMs often stand out in the following areas:

- Customization. You can fine-tune open-source LLMs for your own data and workloads. Additionally, you can apply inference optimization techniques such as speculative decoding, prefix caching and prefill-decode disaggregation for your performance targets. Such custom optimizations are not possible with proprietary models.

- Data security. Open-source LLMs can be run locally, or within a private cloud infrastructure, giving users more control over data security. By contrast, proprietary LLMs require you to send data to the provider’s servers, which can raise privacy concerns.

- Cost-effectiveness. While open-source LLMs may require investment in infrastructure, they eliminate recurring API costs. With proper LLM inference optimization, you can often achieve a better price-performance ratio than relying on commercial APIs.

- Community and collaboration. Open-source projects benefit from broad community support. This includes continuous improvements, bug fixes, new features, and shared best practices driven by global contributors.

- No vendor lock-in. Using open-source LLMs means you don’t rely on a single provider’s roadmap, pricing, or availability.

How big is the gap between open-source and proprietary LLMs?#

The gap between open-source and proprietary LLMs has narrowed dramatically, but it is not uniform across all capabilities. In some areas, open-source models are now competitive or even leading. In others, proprietary frontier models still hold a meaningful advantage.

According to Epoch AI, open-weight models now trail the SOTA proprietary models by only about three months on average.

Here is a summary of the current gap:

| Use case | Gap size | Notes |
|---|---|---|
| Coding assistants & agents | Small | Open models like GLM-4.6 or Kimi-K2 are already strong |
| Math & reasoning | Small | DeepSeek-V3.2-Speciale reaches GPT-5-level performance |
| General chat | Small | Open models increasingly match Sonnet / GPT-5-level quality |
| Multimodal (image/video) | Moderate–Large | Closed models currently lead in both performance and refinement |
| Extreme long-context + high reliability | Moderate | Proprietary LLMs maintain more stable performance at scale |

How to differentiate my LLM application#

As open-source LLMs close the gap with proprietary ones, you no longer gain a big edge by switching to the latest frontier model. Real differentiation now comes from how well you adapt the model and inference pipeline to your product, focusing on performance, cost, and domain relevance.

One of the most effective ways is to fine-tune a smaller open-source model on your proprietary data. Fine-tuning lets you encode domain expertise, user behavior patterns, and brand voice, which cannot be replicated by generic frontier models. Smaller models are also far cheaper to serve, improving margins without sacrificing quality.

To get meaningful gains:

- Build a high-quality, task-focused dataset based on your actual user interactions

- Identify the workflows where specialization has the biggest impact

- Fine-tune small models that can outperform larger models on your specific tasks

- Optimize inference for latency, throughput, and cost (see the next FAQ for details)

Note that this is something you can’t easily do with proprietary models behind serverless APIs due to data security and privacy concerns.

How can I optimize LLM inference performance?#

One of the biggest benefits of self-hosting open-source LLMs is the flexibility to apply inference optimization for your specific use case. Frameworks like vLLM and SGLang already provide built-in support for inference techniques such as continuous batching and speculative decoding.

But as models get larger and more complex, single-node optimizations are no longer enough. The KV cache grows quickly, GPU memory becomes a bottleneck, and longer-context tasks such as agentic workflows stretch the limits of a single GPU.

That’s why LLM inference is shifting toward distributed architectures. Optimizations like prefix caching, KV cache offloading, data/tensor parallelism, and prefill–decode disaggregation are increasingly necessary. While some frameworks support these features, they often require careful tuning to fit into your existing infrastructure. As new models are released, these optimizations may need to be revisited.
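To give a feel for one of these techniques, here is a toy illustration of the idea behind prefix caching: when requests share a leading run of tokens (for example, the same system prompt), the KV entries for that run only need to be computed once. This is a simplified sketch, not how vLLM or SGLang implement it. The class name, the block size of 4, and the use of chained SHA-256 keys are all assumptions made for illustration, and a real engine also manages the actual KV tensors and eviction.

```python
import hashlib


class ToyPrefixCache:
    """Tracks which token blocks have been seen, keyed by their full prefix."""

    def __init__(self, block_size: int = 4):
        self.block_size = block_size  # tokens per cache block (illustrative value)
        self.seen = set()

    def _block_keys(self, tokens: list) -> list:
        """One key per full block; each key depends on all preceding blocks."""
        keys = []
        parent = ""
        full_len = len(tokens) - len(tokens) % self.block_size
        for start in range(0, full_len, self.block_size):
            block = tokens[start:start + self.block_size]
            payload = parent + "|" + ",".join(str(t) for t in block)
            parent = hashlib.sha256(payload.encode()).hexdigest()
            keys.append(parent)
        return keys

    def process(self, tokens: list) -> int:
        """Return how many leading prompt tokens could reuse cached KV entries,
        then register this prompt's blocks for future requests."""
        keys = self._block_keys(tokens)
        hits = 0
        for key in keys:
            if key not in self.seen:
                break  # the shared prefix ends at the first unseen block
            hits += 1
        self.seen.update(keys)
        return hits * self.block_size


if __name__ == "__main__":
    cache = ToyPrefixCache(block_size=4)
    system_prompt = list(range(10))  # stand-in token IDs for a shared system prompt
    print(cache.process(system_prompt + [100, 101]))
    print(cache.process(system_prompt + [200, 201, 202]))
```

Because each key chains in the previous one, a block only counts as a hit when everything before it also matches, which is what makes the reuse safe.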

At Bento, we help teams build and scale AI applications with these optimizations in mind. You can bring your preferred inference backend and easily apply the optimization techniques for best price-performance ratios. Leave the infrastructure tuning to us, so you can stay focused on building applications.

What should I consider when deploying LLMs in production?#

Deploying LLMs in production can be a nuanced process. Here are some strategies to consider:

- Model size: Balance accuracy with speed and cost. Smaller models typically deliver faster responses and lower GPU costs, while larger models can provide more nuanced reasoning and higher-quality outputs. Always benchmark against your workload before committing.

- GPUs: LLM workloads depend heavily on GPU memory and bandwidth. For enterprises to self-host LLMs (especially in data centers), NVIDIA A100, H200, B200 or AMD MI300X, MI350X, MI355X are common choices. Similarly, benchmark your model on the hardware you plan to use. Tools like llm-optimizer can quickly help find the best configuration.

- Scalability: Your deployment strategy should support autoscaling up or down based on demand. More importantly, it must happen with fast cold starts or your user experience suffers.

- LLM-specific observability: Apart from traditional monitoring, logging and tracing, also track inference metrics such as Time to First Token (TTFT), Inter-Token Latency (ITL), and token throughput.

- Deployment patterns: How you deploy LLMs shapes everything from latency and scalability to privacy and cost. Each pattern suits different operational needs for enterprises: BYOC, multi-cloud and cross-region, and on-prem and hybrid.
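The LLM-specific metrics in the list above can be derived from per-token timestamps collected while streaming a response. The sketch below is one simple way to do that. The function name and the returned keys are hypothetical, and throughput is defined here as output tokens divided by total request time, which is one common convention among several.

```python
from statistics import mean


def inference_metrics(request_sent: float, token_times: list) -> dict:
    """Compute TTFT, mean ITL, and token throughput for one streamed request.

    request_sent: time the request was sent (seconds).
    token_times:  arrival time of each output token (seconds, ascending).
    """
    if not token_times:
        raise ValueError("token_times must contain at least one timestamp")
    # Time to First Token: wait between sending the request and the first token.
    ttft = token_times[0] - request_sent
    # Inter-Token Latency: average gap between consecutive output tokens.
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    itl = mean(gaps) if gaps else 0.0
    # Throughput: output tokens per second over the whole request (assumed definition).
    total = token_times[-1] - request_sent
    throughput = len(token_times) / total if total > 0 else float("inf")
    return {"ttft_s": ttft, "itl_s": itl, "tokens_per_s": throughput}


if __name__ == "__main__":
    # Synthetic timestamps for illustration only.
    print(inference_metrics(0.0, [0.5, 0.75, 1.0]))
```

In practice you would record these per request and track percentiles rather than averages, since tail latency is what users notice.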

Final thoughts#

The rapid growth of open-source LLMs has given teams more control than ever over how they build AI applications. They are closing the gap with proprietary ones while offering unmatched flexibility.

At Bento, we help AI teams unlock the full potential of self-hosted LLMs. By combining the best open-source models with tailored inference optimization, you can focus less on infrastructure complexity and more on building AI products that deliver real value.

To learn more about self-hosting LLMs:

- Read our LLM Inference Handbook

- Benchmark and optimize LLM performance with llm-optimizer

- Join the BentoML Slack community to connect with other builders

- Sign up for our inference platform and deploy your first LLM in the cloud

- Schedule a call with us to discuss your LLM use case