Productionizing Fastai Models

Mar 11, 2022 • Written By Jithin James

Fastai+BentoML = 🔥

I owe a lot to the FastAI community, and I’m pretty sure a lot of the folks I know will say the same. A whole legion of ML engineers has been brought up on Jeremy's tutorials and his top-down approach.

The FastAI library is another contribution from the community, and it encapsulates the top-down approach they take. It's simple enough that you can get started with a couple of lines of code, yet powerful enough to handle most use cases you might come across on your ML journey. It's so well designed that we have people saying:

I think of Jeremy Howard as the Don Norman of Machine Learning. — Mark Saroufim

But one thing I feel needs a bit more work is deployment. With FastAI you get a trained model, but you have to either write your own backend to serve it or use some other service that does this for you. In this blog, I’d like to introduce a stack that I’ve found to play very well with FastAI.

Intro to BentoML

I’ve talked about BentoML before. I think of it as a wrapper around your trained models that makes it super easy to deploy and manage APIs. It's easy to get started with and has a lot of best practices built in, so you have a fairly performant API right from the start. BentoML supports a wide range of ML frameworks (full list here) and a whole lot of deployment options too (list here). This makes it easy to use with your existing workflow and still gain all of the benefits.

In this blog, I’ll show you how you can take your FastAI model and easily push it into production as a polished API with the help of BentoML. We can then use any of the deployment options that BentoML supports.

To start off, let's train a useful model.

FastAI Dog V/S Cat

All those who have gone through the course will have already built this model and seen it in action. It's a simple model that identifies whether a given picture shows a dog or a cat. It’s part of the official FastAI tutorial, and you can check it out to dig deeper. (If you have not gone through the tutorial or are new to the FastAI library, I’d recommend going through the FastAI tutorial first to get a better understanding.)

First, let's get the dataloaders set up.

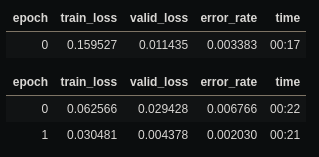

```python
# load all the required modules
from fastai.vision.all import *
from pathlib import Path

# download and check the data
path = untar_data(URLs.PETS)
print(path.ls())

# load image files
files = get_image_files(path/"images")
print(len(files))  # 7390

# the function to label a given image as Dog or Cat, i.e. the Ys.
# The file names that begin with caps are cats and the others are
# dogs, so we will use that logic.
def label_func(f):
    return str(f)[0].isupper()

# build dataloader for Learner
dls = ImageDataLoaders.from_name_func(path, files, label_func, item_tfms=Resize(224))

# let's visualize one batch
dls.show_batch()
```

The output from dls.show_batch(). (Source — Oxford-IIIT pets dataset)
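To make the labelling rule concrete, here is a small standalone sketch that needs no FastAI install. It is a variant of the label_func above, not the exact function from the post: it looks only at the file name via pathlib, so passing a full path gives the same answer. The file names are illustrative examples in the dataset's breed_number.jpg style.

```python
from pathlib import Path


def label_func(f):
    # Variant of the post's function: use only the file name, so a
    # directory prefix such as "images/" cannot affect the result.
    return Path(f).name[0].isupper()


# Illustrative names: cat breeds are capitalised, dog breeds are lowercase.
examples = ["Abyssinian_1.jpg", "beagle_1.jpg", "images/Bengal_2.jpg"]
labels = {name: label_func(name) for name in examples}
print(labels)
# {'Abyssinian_1.jpg': True, 'beagle_1.jpg': False, 'images/Bengal_2.jpg': True}
```

True means cat and False means dog, which is also what the API returns later on.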

Now that we have the data ready, let's train the Learner on it.

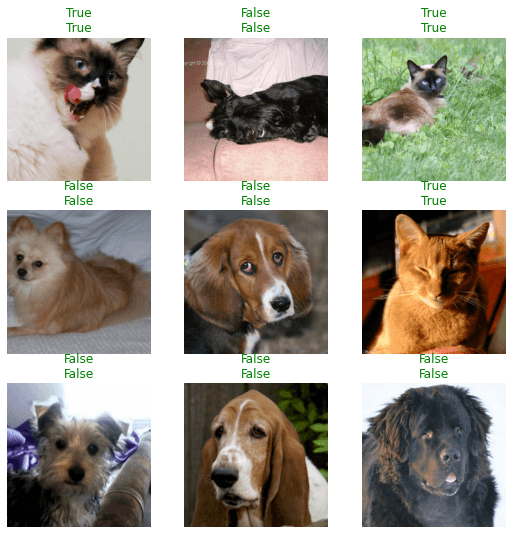

```python
learner = cnn_learner(dls, resnet34, metrics=error_rate)
learner.fine_tune(2)

# Show predictions on a batch
learner.show_results()
```

(above) the output of the learner showing train and validation loss for 2 epochs. (below) the predictions from the model and as you can see we have a very good model trained. (Source: Oxford-IIIT Pets dataset)

And with a couple of lines of code, we have a very strong Dog v/s Cat classifier. It's fast AI, bro.

Making It Ready To Ship

Now that you’ve got a nice model trained, let's get this party started! We’ll first save the model with the FastAI export function and run inference on it. By default, learner.export(fname) saves the contents of the learner, without the dataset items and optimizer state, into the learner.path directory, ready for inference. To load the model, call load_learner(learner.path/fname).

One thing to know here is that export() uses Python’s pickle module to serialise the learner. Pickle stores custom functions by reference rather than by value, so any custom code for models, data transformations or loss functions is not saved in the file and has to be importable before you call load_learner(). In our modest example, we used label_func() to label the images as dog or cat. This custom function is attached to the learner, so when we load the model it has to be available under the same module name. To make this easier, we move all such custom code into a separate file called dogvscat_utils.py. Bento will also pack this utility module when exporting.

```python
p = learner.path
learner.export('model.pkl')
print('Saved to:', learner.path)

# now let's load the exported model
from dogvscat_utils import label_func
loaded_learner = load_learner(p/'model.pkl')
```

Saved to: /home/jithin/.fastai/data/oxford-iiit-pet
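The reason label_func has to be importable at load time can be seen with the standard library alone. The sketch below is only an illustration: json.dumps stands in for any module-level function such as label_func. The pickled bytes contain just the module name and the function name, and unpickling looks the function up again by import.

```python
import json
import pickle

# json.dumps is a stand-in for a custom module-level function
# such as dogvscat_utils.label_func.
payload = pickle.dumps(json.dumps)

# The payload is tiny: it holds the names "json" and "dumps",
# not the source code of the function.
print(len(payload), payload)

# Unpickling imports the module and fetches the attribute by name,
# so we get back the very same function object.
restored = pickle.loads(payload)
print(restored is json.dumps)  # True
```

If the module cannot be imported where the pickle is loaded, unpickling fails, which is why the helper lives in its own file that travels with the model.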

Now that you have your model loaded, you have to create a test dataloader and use the learner's get_preds() to get the predictions for all the items in it. Download a bunch of images of cats and dogs and keep them in a folder called images; we’ll run the predictions on those.

```python
# load all the files in a dir
files = get_image_files('images')
print('Files for inference: ', files)

# make the test dataloader
dl = loaded_learner.dls.test_dl(files)
print(dl)

# perform the predictions
loaded_learner.get_preds(dl=dl)
```

```
(#6) [Path('images/c1.jpg'),Path('images/d2.jpg'),Path('images/c2.jpg'),Path('images/d3.jpg'),Path('images/c3.jpg'),Path('images/d1.jpg')]
(tensor([[4.7564e-06, 1.0000e+00],
         [9.9999e-01, 1.1712e-05],
         [5.6602e-12, 1.0000e+00],
         [9.9947e-01, 5.2984e-04],
         [2.6386e-18, 1.0000e+00],
         [9.9998e-01, 1.9747e-05]]), None)
```
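Here is a rough sketch of how to read that tensor, using plain Python lists instead of torch. The probabilities are copied from the output above. The class order [False, True] is an assumption (labels sorted, so index 1 means label_func returned True, i.e. cat); on a real learner you can check it with loaded_learner.dls.vocab.

```python
# One row of probabilities per file, copied from the get_preds() output.
files = ["c1.jpg", "d2.jpg", "c2.jpg", "d3.jpg", "c3.jpg", "d1.jpg"]
probs = [
    [4.7564e-06, 1.0000e+00],
    [9.9999e-01, 1.1712e-05],
    [5.6602e-12, 1.0000e+00],
    [9.9947e-01, 5.2984e-04],
    [2.6386e-18, 1.0000e+00],
    [9.9998e-01, 1.9747e-05],
]

# Assumption: index 0 is False (dog) and index 1 is True (cat).
vocab = [False, True]


def decode(row):
    # Pick the index with the highest probability and map it to its label.
    best = max(range(len(row)), key=lambda i: row[i])
    return vocab[best]


is_cat = {name: decode(row) for name, row in zip(files, probs)}
print(is_cat)
```

Under that assumption, every file starting with c decodes to True and every file starting with d decodes to False, so all six test images are classified correctly.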

Now that you’ve got an idea of how to do inference using FastAI, the next goal is to get this behind an API. You could use something like FastAPI, and it's fairly easy to set up, but we’ll be using Bento because it's batteries included. To use BentoML with your model, you first have to wrap the prediction function of your model within a BentoService. The BentoService is used to specify a few things:

1. Which framework was used to train the model. This comes from bentoml.frameworks.

2. The input type the API is expecting and how to handle it. This comes from bentoml.adapters.

3. How the API should take the input, do the inference and process the output. There could be cases where the output from one model is the input to another model, so all of that logic goes here, inside the predict() function.

Based on the above information, BentoML will decide the best way to pack and serve your model. I’ve built a sample BentoService for our FastAI model. Let's look at it, and I’ll break it down and explain.

```python
# save to bentoservice.py
import bentoml
from bentoml.frameworks.fastai import FastaiModelArtifact
from bentoml.adapters import FileInput

import dogvscat_utils  # custom label_func lives here and is packed with the service


# Artifacts are the models, JSON files or any other files required for inference.
@bentoml.artifacts([FastaiModelArtifact('learner')])
# The env decorator handles the environment. You can include all the pip and conda
# packages you use here and they will be packed with the model.
@bentoml.env(infer_pip_packages=True)
# The BentoService that handles the model.
class DogVCatService(bentoml.BentoService):

    # You interact with the data through HTTP requests, so you have to convert them
    # into whatever format your model is expecting, like a pandas dataframe or a
    # numpy array. The api and input adapters handle this conversion for you.
    @bentoml.api(input=FileInput(), batch=True)
    def predict(self, files):
        # read the raw bytes of every uploaded file
        files = [i.read() for i in files]
        dl = self.artifacts.learner.dls.test_dl(files, rm_type_tfms=None, num_workers=0)
        inp, preds, _, dec_preds = self.artifacts.learner.get_preds(dl=dl, with_input=True, with_decoded=True)
        # one boolean per input file: True for cat, False for dog
        return [bool(i) for i in dec_preds]
```

If you want a more in-depth introduction, check out their core concepts page. Now you have a bentoservice file saved and ready. Using this we can pack and save the FastAI model.

Packing is what Bento is all about. It takes your model and all its dependencies and packs them into a standard format that makes it easy to deploy to whichever service you want. To pack our DogVCatService, we have to initialise the service and call its pack() function. This checks all the dependencies, builds the Docker files and packages everything into a standard form. You can then call the save() function to save the packed service as its own version.

```python
from bentoservice import DogVCatService

svc = DogVCatService()
svc.pack('learner', loaded_learner)
svc.save()
```

```
[2021-01-19 23:20:42,967] WARNING - BentoML by default does not include spacy and torchvision package when using FastaiModelArtifact. To make sure BentoML bundle those packages if they are required for your model, either import those packages in BentoService definition file or manually add them via `@env(pip_packages=['torchvision'])` when defining a BentoService
[2021-01-19 23:20:42,970] WARNING - pip package requirement torch already exist
[2021-01-19 23:20:42,971] WARNING - pip package requirement fastcore already exist
[2021-01-19 23:20:42,974] WARNING - pip package requirement fastai>=2.0.0 already exist
[2021-01-19 23:20:44,734] INFO - BentoService bundle 'DogVCatService:20210119232042_EF1BDC' created at: /home/jithin/bentoml/repository/DogVCatService/20210130121631_7B4EDB
```

Done! You’ve now created your first BentoService. To see it in action, go to the command line and run bentoml serve DogVCatService:latest. This will launch the dev server, and if you head over to localhost:5000 you can see your model’s API in action.

Deploying Your Packed Models

As I mentioned earlier, BentoML supports a wide variety of deployment options (you can check the whole list here), and you can choose the one that works for you. In this section, I’ll show you how to containerize BentoML models, since that is a popular way of distributing a model API.

If you have Docker set up, simply run

bentoml containerize DogVCatService:latest

to produce the docker container that is ready to serve our DogVCatService.

[2021-01-31 12:40:24,084] INFO - Getting latest version DogVCatService:20210130144339_7E6221 |Build container image: dogvcatservice:20210130144339_7E6221

Now you can run the docker container by running

docker run --rm -p 5000:5000 dogvcatservice:{container version}
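If you script this step, the container version has to be filled in somehow. Judging only from the log line above, the image tag looks like the bundle tag with the service name lowercased. The helper below is a hypothetical convenience based on that single observation, not a documented BentoML rule, so check it against the output of bentoml containerize on your machine.

```python
def image_tag_from_bundle(bundle_tag):
    # Hypothetical helper: split "Name:version" and lowercase the name,
    # mirroring what the containerize log above shows.
    name, version = bundle_tag.split(":", 1)
    return f"{name.lower()}:{version}"


bundle = "DogVCatService:20210130144339_7E6221"  # taken from the log above
image = image_tag_from_bundle(bundle)
print(f"docker run --rm -p 5000:5000 {image}")
```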

Head over to localhost:5000 to see the Swagger UI, or use curl to get the response

curl -X POST --data-binary @test_images/d1.jpg localhost:5000/predict

It should return true for cats and false for dogs.
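The same call can be made from Python with only the standard library. This is a minimal sketch rather than an official client: build_predict_request is a hypothetical helper name, the URL assumes the server from the previous step is running on localhost:5000, and the image/jpeg Content-Type header is an assumption about what the file input adapter expects.

```python
import urllib.request


def build_predict_request(image_bytes, url="http://localhost:5000/predict"):
    # Hypothetical helper: POST the raw image bytes as the request body.
    return urllib.request.Request(
        url,
        data=image_bytes,
        method="POST",
        headers={"Content-Type": "image/jpeg"},  # assumption, adjust if needed
    )


# Placeholder bytes; in practice use open("test_images/d1.jpg", "rb").read()
req = build_predict_request(b"\xff\xd8 placeholder image bytes")
print(req.get_method(), req.full_url)

# With the server running, send the request like this:
# with urllib.request.urlopen(req) as resp:
#     print(resp.read().decode())
```

Building the request separately from sending it keeps the sketch runnable even when no server is up.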

Conclusion

And there you have it, you have successfully created a production level API for your FastAI model with just a couple lines of code (truly FastAI style 😉). There is also a GitHub repo accompanying this blog. Check it out if you want to experiment with the finished version.