ML Model Reproducibility: Predicting COVID

Apr 25, 2022 • Written By Spencer Churchill

Creating machine learning models that have the potential to change the world is one of the most rewarding aspects of a data scientist’s job. But in order to get any ML model into the real world, it needs to be deployed in a scalable and repeatable way — a task much easier said than done.

The engineering hurdles in the final stretch are often both time-consuming and challenging to solve. In fact, a report from Algorithmia found that for many organizations, over 50% of their data scientists’ time is spent on deploying machine learning models to production.

I learned this first-hand during a 48-hour medical hackathon I took part in back in 2020. In this post, I’ll share my journey (ahem, my *mistakes*) and the lessons I learned from deploying a COVID cough classification model, so that you don’t fall victim to the same pitfalls.

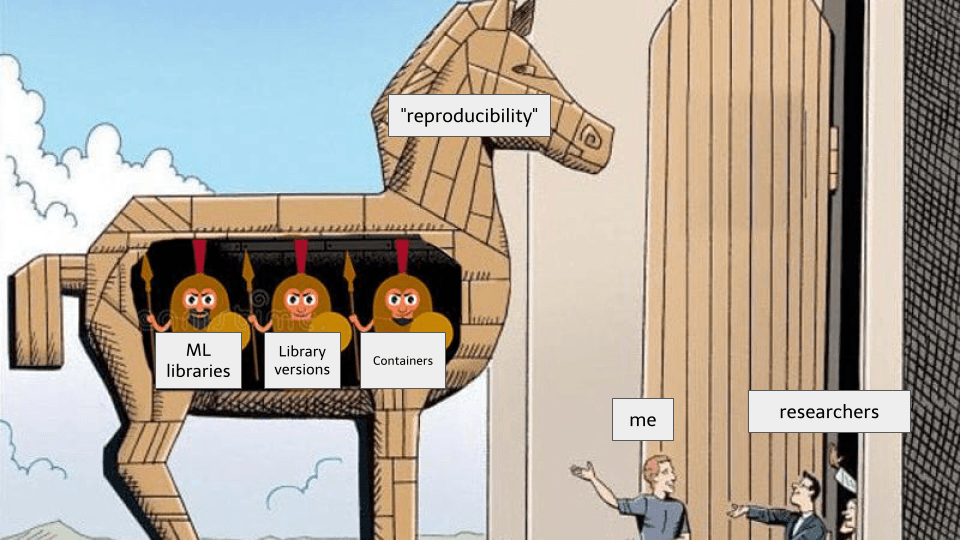

Foreshadowing

A Little Background On The Project

The hackathon’s goal was to create a model that could detect COVID from audio recordings of patients’ coughs. We used an existing dataset of recorded coughs, converted the audio files into spectrogram images, and then trained a Convolutional Neural Network (CNN) on them, since CNNs are effective at classifying images.
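
The post doesn’t say exactly how the spectrograms were produced; in practice a library such as librosa is commonly used to compute a (mel) spectrogram. As a dependency-free sketch of the underlying idea only, the code below computes a plain magnitude spectrogram with a naive short-time Fourier transform. The frame size, hop length, sample rate, and the synthetic 1 kHz test tone are all assumptions chosen for illustration, not the team’s actual preprocessing.

```python
from __future__ import annotations

import cmath
import math


def hann(n: int) -> list[float]:
    """Periodic Hann window; tapers frame edges to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def spectrogram(signal: list[float], frame_size: int = 256, hop: int = 128) -> list[list[float]]:
    """Magnitude spectrogram from a naive short-time Fourier transform.

    Returns one row per frame; each row holds frame_size // 2 + 1 magnitudes,
    from 0 Hz up to the Nyquist frequency. The direct DFT costs
    O(frame_size^2) per frame, so this is for illustration only.
    """
    window = hann(frame_size)
    n_bins = frame_size // 2 + 1
    rows = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        # Window the current slice of audio.
        frame = [s * w for s, w in zip(signal[start:start + frame_size], window)]
        row = []
        for k in range(n_bins):
            # DFT coefficient for frequency bin k.
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / frame_size) for i, x in enumerate(frame))
            row.append(abs(coeff))
        rows.append(row)
    return rows


# Hypothetical input: a 1 kHz tone sampled at 8 kHz stands in for a real cough.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(1024)]

spec = spectrogram(tone)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(f"{len(spec)} frames x {len(spec[0])} bins, peak at {peak_bin * SAMPLE_RATE / 256:.0f} Hz")
```

Each row is one time slice; stacking the rows (usually after taking the log of the magnitudes) gives the two-dimensional "image" that a CNN can consume.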

Within the first 24 hours, we trained the model to the point where we could, with 80%+ accuracy, detect COVID from coughs. With the model nearing completion, victory seemed in sight! We had done the impossible and the world would cheer as we saved lives one cough at a time. 😉

When it came time to integrate the model into the server, I exported the model weights, preprocessed the cough audio from the microphone, and ran the model on it. Evaluating the model on my MacBook yielded an accuracy of around 83%. However, when the same dataset was evaluated on the server where we had planned to host the model, it performed abysmally!

Fail Whale is not enjoying deployment

What I Tried

The first thing I did was follow PyTorch’s recommendation of saving the model’s weights (`model.state_dict()`).

That didn’t work.

Next, I tried pickling the entire `model` with `torch.save`, thinking that if the class methods were packaged alongside the weights, I could avoid these issues.

That also didn’t work.

I reasoned that if the package versions were the same, the model should behave the same way. So I used `pip freeze` to capture the exact dependencies in my environment, sent that list over to be installed on the server, and…

The model failed the evaluation again.

The end of the 48 hours was approaching quickly when it dawned on me that I had been developing my model on macOS, while the server was running on a teammate’s Windows machine. Moving from macOS to Windows can produce errors caused by differences in the compiled library binaries each platform uses.

Shoot… well, it was too late in the game to compensate for this, so we ended up just hosting the model on my laptop and making API calls to it from the server. 🤷

What I Learned

A few days after the hackathon ended, I began digging into what went wrong during deployment. It turns out that while my attempts to course-correct were heading in the right direction, ML model deployment is extremely nuanced: it only succeeds when you work through a specific set of considerations in turn and treat them as a whole, rather than fixing one in isolation.

So, what did I learn in the end?

Lesson #1: Save Your Model Based On The Framework Format

Popular ML Frameworks

Depending on the framework, there are different best practices for saving a model, and the key is usually understanding the tradeoffs between the available methods. In our case during the hackathon, PyTorch recommended saving the `state_dict`, but this approach can be brittle: the file holds only the parameter tensors, so loading it requires re-instantiating the model from a class definition whose layers and parameter names still match the ones used when the weights were saved. Alternatively, `torch.save(model)` saves the entire model, but because pickle stores only a reference to the model class rather than its code, the PyTorch documentation states that the saved model is bound to the specific classes and the exact directory structure used when it was saved. In hindsight, having done more research, I found that PyTorch gives you the ability to customize the save and load behavior to account for these edge cases, but either way extra care is needed.
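
The directory-structure constraint comes from how pickle works, and `torch.save(model)` relies on pickle. The stdlib-only sketch below (no PyTorch required) mimics both strategies with a hypothetical `CoughClassifier` class whose "weights" are a plain list: pickling the whole object records the class only by module and name, so loading fails once that name can no longer be found, while a plain-data snapshot, analogous to a `state_dict`, still loads. It is an analogy for the failure mode, not PyTorch’s actual serialization code.

```python
import pickle


class CoughClassifier:
    """Hypothetical stand-in for an nn.Module; its 'weights' are a plain list."""

    def __init__(self, weights):
        self.weights = weights


model = CoughClassifier(weights=[0.12, -0.5, 0.33])

# Whole-object save (roughly what torch.save(model) does under the hood):
# pickle records the class's module and name, NOT the class's code.
full_blob = pickle.dumps(model)
references_class = b"CoughClassifier" in full_blob

# "State dict" style save: only plain data, no reference to the class.
state_blob = pickle.dumps({"weights": model.weights})

# Simulate a server where the class can't be found under the same module
# path (renamed, moved, or a different directory layout).
del CoughClassifier

try:
    pickle.loads(full_blob)
    full_load_ok = True
except (AttributeError, pickle.UnpicklingError) as exc:
    full_load_ok = False
    print("full-object load failed:", exc)

# The plain-data snapshot still loads. Rebuilding a usable model from it
# would still require a compatible class definition on the loading side.
restored = pickle.loads(state_blob)
print("state load ok:", restored["weights"])
```

Neither option is free: the whole-object file breaks when code moves, and the plain-data file breaks when the class definition drifts, which is why the framework’s recommended format still needs care.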

Lesson #2: Use The Same Dependency Versions

Use “pip freeze” to determine which exact versions to use

Freezing and pinning the exact versions of your dependencies, including transitive ones, that the model was trained with is a good way to create reproducible results. It reduces the risk of a Python library handling arguments differently, whether by changing default arguments or by changing which operations are applied to those arguments. Tools like Poetry and pip-tools can also help keep your dependencies organized.
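
A quick way to check this in practice is to run `pip freeze` on both machines and diff the results. The sketch below is a hypothetical helper, not part of pip: it parses `name==version` lines (normalizing names per PEP 503), skips comments, editable installs, and URL-based entries, and reports every package whose version differs or is missing. The two snapshots and their version numbers are made up for illustration.

```python
from __future__ import annotations

import re


def normalize(name: str) -> str:
    """PEP 503 name normalization, so 'Torch_Audio' and 'torch-audio' compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_freeze(text: str) -> dict[str, str]:
    """Parse `pip freeze` output into {normalized_name: version}.

    Only 'name==version' lines are kept; blank lines, comments, editable
    installs (-e ...) and 'name @ url' entries are skipped in this sketch.
    """
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "-")) or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[normalize(name)] = version.strip()
    return pins


def diff_envs(dev: str, prod: str) -> dict[str, tuple]:
    """Return {package: (dev_version, prod_version)} for each mismatch; None means missing."""
    a, b = parse_freeze(dev), parse_freeze(prod)
    return {
        pkg: (a.get(pkg), b.get(pkg))
        for pkg in sorted(a.keys() | b.keys())
        if a.get(pkg) != b.get(pkg)
    }


# Hypothetical snapshots; the versions are illustrative only.
laptop = """
numpy==1.21.5
torch==1.10.2
torchaudio==0.10.2
"""
server = """
numpy==1.22.3
torch==1.10.2
"""

for pkg, (dev_v, prod_v) in diff_envs(laptop, server).items():
    print(f"{pkg}: dev={dev_v} prod={prod_v}")
```

An empty diff is necessary but not sufficient: identical pins can still resolve to different platform-specific binaries on macOS and Windows, which is exactly the gap the next lesson addresses.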

Lesson #3: Use Containerized Environments

Docker is the standard for containerization

When you develop a model on one operating system but deploy it on another (in our case, macOS and Windows, respectively), that’s a strong signal to use a containerized or otherwise reproducible environment, built with tools like Docker or the Nix package manager. If the operating system and machine remain constant from development to production, the results should be reproducible. During the hackathon, by the time we realized what we should have done, it was too late, which is why we ended up running the classifier on my laptop.
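
A container pins the operating system and native libraries for you. Where that isn’t possible, or as a cheap extra safeguard, one option is to record a small environment fingerprint next to the weights at training time and compare it when the model is loaded. The sketch below uses only the standard `platform` module; the chosen fields and the helper names are assumptions, and a matching fingerprint does not guarantee identical numerical results.

```python
from __future__ import annotations

import platform


def environment_fingerprint() -> dict[str, str]:
    """Collect a few facts that commonly differ between dev and prod machines."""
    return {
        "os": platform.system(),        # e.g. 'Darwin', 'Windows', 'Linux'
        "arch": platform.machine(),     # e.g. 'x86_64', 'arm64', 'AMD64'
        "python": platform.python_version(),
    }


def check_environment(saved: dict[str, str], current: dict[str, str]) -> list[str]:
    """Return human-readable mismatches between training and serving environments."""
    return [
        f"{key}: trained on {saved.get(key)!r}, running on {current.get(key)!r}"
        for key in sorted(saved.keys() | current.keys())
        if saved.get(key) != current.get(key)
    ]


# Hypothetical fingerprint saved alongside the weights at training time.
trained_on = {"os": "Darwin", "arch": "x86_64", "python": "3.8.12"}

problems = check_environment(trained_on, environment_fingerprint())
if problems:
    print("warning: serving environment differs from training:", *problems, sep="\n  ")
```

The helper only warns rather than refusing to load, since some differences (such as a patch-level Python version) are often harmless; a stricter deployment could treat an OS mismatch as a hard error.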

Lesson #4: Version Each Model

As I went through this exercise of trying to figure out where it all went wrong, I ended up with so many saved versions of my model that I couldn’t tell which one was which. At the time, I didn’t realize that, just as with code, versioning your models keeps you from getting lost among the many iterations you go through. In production it’s particularly important: if you ever need to run an A/B test or roll back a bad model, you’ll have the means to do so.
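
To make the idea concrete, here is a minimal sketch of a local, content-addressed model registry using only the standard library. The directory layout, the `history.json` index, and the function names are all hypothetical: each save derives its version tag from a hash of the weight bytes (so identical weights always get the same tag), and a rollback is simply loading the tag saved before the latest one.

```python
from __future__ import annotations

import hashlib
import json
import tempfile
import time
from pathlib import Path


def save_version(registry: Path, name: str, weights: bytes, metadata: dict) -> str:
    """Store weights under a content-derived version tag and record it in a history index."""
    digest = hashlib.sha256(weights).hexdigest()[:12]  # same bytes -> same tag
    model_dir = registry / name / digest
    model_dir.mkdir(parents=True, exist_ok=True)
    (model_dir / "weights.bin").write_bytes(weights)
    (model_dir / "meta.json").write_text(json.dumps({**metadata, "saved_at": time.time()}))
    index = registry / name / "history.json"
    history = json.loads(index.read_text()) if index.exists() else []
    if digest not in history:
        history.append(digest)
    index.write_text(json.dumps(history))
    return f"{name}:{digest}"


def load_version(registry: Path, tag: str) -> bytes:
    """Load the exact weights for a tag of the form 'name:digest'."""
    name, digest = tag.split(":", 1)
    return (registry / name / digest / "weights.bin").read_bytes()


def previous_version(registry: Path, name: str) -> str:
    """Tag of the version saved before the latest one, i.e. the rollback target."""
    history = json.loads((registry / name / "history.json").read_text())
    if len(history) < 2:
        raise LookupError(f"no earlier version of {name!r} to roll back to")
    return f"{name}:{history[-2]}"


# Usage with placeholder bytes standing in for real serialized weights.
with tempfile.TemporaryDirectory() as tmp:
    registry = Path(tmp)
    v1 = save_version(registry, "cough_cnn", b"weights from run 1", {"note": "baseline"})
    v2 = save_version(registry, "cough_cnn", b"weights from run 2", {"note": "retrained"})
    rollback_tag = previous_version(registry, "cough_cnn")
    restored = load_version(registry, rollback_tag)
    print(v1, v2, rollback_tag == v1)
```

Hashing the weights means a tag can never silently point at different bytes, which is the property that makes "which file was the good one?" answerable after the fact.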

ML Model Serving Made Easy With BentoML

During my post-hackathon retrospective, I discovered that I wasn’t the only one experiencing those challenges — and even better, I discovered that there was a solution!

Enter: BentoML, an open source ML model serving framework that automates the serving and deployment process.

All of the lessons I learned earlier are built right into BentoML. You specify the packages and system you’re using, and BentoML will configure the correct Docker container and package the needed dependencies, as well as optimize your deployment with high-performance features like adaptive micro-batching built into the service.
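
Micro-batching groups requests that arrive close together so the model can process them in a single forward pass, trading a little latency for much better throughput. BentoML’s batcher, as the name suggests, adapts its batching window at runtime; the sketch below is only a simplified, offline illustration of the core grouping rule with a fixed `max_batch_size` and `max_wait` (hypothetical parameter names), not BentoML’s implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Request:
    arrival: float  # seconds since the server started
    payload: str


def micro_batch(requests: list[Request], max_batch_size: int, max_wait: float) -> list[list[Request]]:
    """Group time-ordered requests into batches.

    A batch is closed when it reaches max_batch_size, or when the next request
    arrives more than max_wait seconds after the first request in the batch.
    A live server would also close a batch on a timer; this offline version
    only looks at arrival times.
    """
    batches: list[list[Request]] = []
    current: list[Request] = []
    for req in requests:
        too_full = len(current) >= max_batch_size
        too_late = bool(current) and req.arrival - current[0].arrival > max_wait
        if current and (too_full or too_late):
            batches.append(current)
            current = []
        current.append(req)
    if current:
        batches.append(current)
    return batches


# Hypothetical arrival times (in seconds) for five cough recordings.
arrivals = [0.000, 0.002, 0.004, 0.030, 0.031]
reqs = [Request(t, f"cough-{i}") for i, t in enumerate(arrivals)]
for batch in micro_batch(reqs, max_batch_size=3, max_wait=0.010):
    print([r.payload for r in batch])
```

The two limits pull in opposite directions: a larger `max_wait` fills bigger batches but delays the first request in each one, which is why adapting the window to observed traffic is attractive.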

Let’s recreate the situation above using BentoML for serving the model.

```python
bentoml.pytorch.save("my_model", model)
```

This one command inspects your model and determines the best way to save it based on recommended best practices. Every library is a little different, so no single saving method works for all of them.

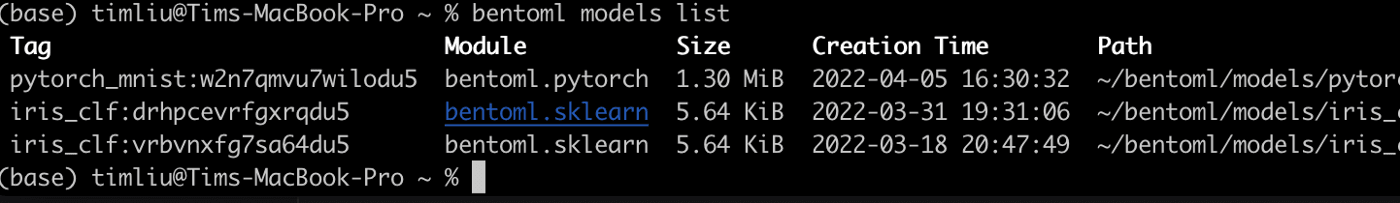

```bash
bentoml models list
```

Every time you save your model, it is versioned along with all of the current dependencies and their versions. If a newer version misbehaves, you can always revert to a working snapshot. You can also push models to a remote model registry (BentoML’s Yatai service).

```python
model = bentoml.pytorch.load("my_model")
```

It’s that easy to load the model back in whatever context that you’d like.

Now to deploy your service, you’ll use a straightforward configuration file.

```yaml
# bentofile.yaml
service: "service.py:svc"  # A convention for locating your service (<file>:<Service variable>)
description: "file: ./README.md"
include:
  - "*.py"  # A pattern for matching which files to include in the bento
python:
  packages:
    - torch  # Additional libraries to be included in the bento (PyTorch's PyPI package is "torch")
docker:
  distro: debian
  gpu: True
```

The bentofile.yaml defines which files belong in the deployable and the corresponding dependencies. This way, the service is as lightweight as possible.

```bash
bentoml build
bentoml containerize
```

Finally, when you build your bento, the library freezes your dependency versions and packages them into one deployable artifact, along with your model and service code. Then, to avoid any further complications, BentoML creates a Docker container that provisions the native libraries and runs your code independently of the host operating system’s quirks.
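
To illustrate the "one deployable package" idea, the toy sketch below bundles model bytes, service code, and pinned requirements into a single zip archive with a small manifest, then reads it back as a deployment step would. BentoML’s real bento format is different (among other things, it also records generated environment files for building the container); the file names, the manifest fields, and the `build_bundle` helper here are all hypothetical.

```python
from __future__ import annotations

import io
import json
import zipfile


def build_bundle(model_bytes: bytes, service_code: str, pins: dict[str, str]) -> bytes:
    """Pack model, service code and pinned requirements into one zip archive."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("models/model.bin", model_bytes)
        zf.writestr("src/service.py", service_code)
        # Exact pins, one per line, in the same format pip freeze emits.
        zf.writestr("requirements.txt", "".join(f"{n}=={v}\n" for n, v in sorted(pins.items())))
        # Manifest tells the deploy side where each piece lives.
        zf.writestr("manifest.json", json.dumps({
            "model": "models/model.bin",
            "service": "src/service.py",
            "requirements": "requirements.txt",
        }))
    return buf.getvalue()


# Hypothetical contents; real weights and service code would go here.
bundle = build_bundle(b"\x00fake-weights", "print('serving')\n", {"torch": "1.10.2", "numpy": "1.21.5"})

# Deploy side: everything needed is recovered from the single artifact.
with zipfile.ZipFile(io.BytesIO(bundle)) as zf:
    names = sorted(zf.namelist())
    manifest = json.loads(zf.read("manifest.json"))
    requirements = zf.read("requirements.txt").decode()

print(names)
print(requirements, end="")
```

Keeping the model, code, and exact pins in one versioned artifact means the serving machine never has to reassemble them by hand, which is the step that went wrong at the hackathon.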

Your models, dependencies and code all packaged into a delicious “Bento”

Conclusion

While this was just a friendly hackathon meant to teach and challenge us during the pandemic, it is also the reality for many machine learning projects. The ML libraries have come so far these last 10 years, but operationalizing these models still requires engineering work that is fairly nuanced, presenting unique challenges that differ from traditional software operations.

The good news is that solutions like BentoML have hit the market to solve these exact challenges so that more data scientists can ship more models to production — and ultimately change the world!
